MCP Knowledge Graph: Contextual Data Insights for Enterprises

Large language models (LLMs) are being adopted across industries to analyze data, automate workflows, and support decision-making. Their value depends on context: without access to the right information, models can produce answers that are incomplete or misleading. Supplying this context has traditionally required custom integrations between enterprise systems and LLMs, an approach that is often complex and expensive.

The Model Context Protocol (MCP) was introduced to address this challenge. MCP defines a standard way for applications to expose data, tools, and services that LLMs can call through a consistent interface. This removes the need for bespoke connectors and gives organizations a secure, scalable framework for making external context available to models.

When we refer to the MCP Knowledge Graph, we mean a graph-based service that participates in this ecosystem. In practice, this means using MCP as the bridge between LLMs and a knowledge graph, similar in spirit to graph RAG but standardized and interoperable. The graph organizes enterprise data as entities and relationships, making context not only accessible but also connected.

In the sections ahead, we will look at how the MCP Knowledge Graph works, its components, features, benefits, and business use cases, and how PuppyGraph helps bring it to life at enterprise scale.

What Is an MCP Knowledge Graph?

The Model Context Protocol (MCP) defines how large language models connect to external data and services through a standardized client–server pattern. Any system that wants to provide context to an LLM can act as an MCP server, while the LLM application functions as the client. This design makes it possible to expose different kinds of context sources, such as databases, APIs, and tools, through a unified protocol rather than custom integrations.

An MCP Knowledge Graph is one such service. It is not part of the MCP specification itself, but rather a way of describing how a knowledge graph can be exposed within this ecosystem. In this setup, the graph serves as the context provider. It models enterprise data as entities and relationships, then makes that structure available to LLMs through MCP.

The result is similar to graph RAG (retrieval-augmented generation), but framed within the MCP standard. Instead of feeding models isolated documents or records, the graph delivers structured context: customers linked to orders, assets linked to policies, alerts linked to risks. With this connected view, the model can reason more effectively and provide grounded answers that reflect both facts and their relationships.

Understanding the MCP Framework

The Model Context Protocol (MCP) was introduced to standardize how large language models connect with external systems. Its design follows a client–server model, but with a specific division of roles. The LLM application, or an agent acting on the model's behalf, operates as the MCP client. The external system is not exposed directly; instead, it is wrapped by an MCP server that speaks the protocol.

An MCP server acts as a bridge. It sits in front of a database, API, or tool and translates its functions into a standard interface that clients can call. Client and server exchange JSON-RPC 2.0 messages, giving both sides a consistent way to send requests and responses. The underlying system stays behind the server, while the client only sees the capabilities that the server chooses to expose.

This architecture can be viewed as two layers of client–server interaction. First, the MCP client communicates with the MCP server using the shared protocol. Second, the MCP server interacts with the external service through its native APIs or drivers. From the client’s perspective, all services look uniform, which eliminates the need for one-off connectors and makes it easier to combine multiple context sources into a single workflow.
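To make the first layer concrete, here is a minimal Python sketch of the JSON-RPC 2.0 envelope an MCP client might send to invoke a tool, plus a helper that unpacks the reply. The `tools/call` method and its `name`/`arguments` parameters follow the MCP specification; the helper names `make_tool_call` and `parse_response` are our own, and the sketch leaves out the initialization handshake and the transport.

```python
import json
from itertools import count

_ids = count(1)  # each request in a session needs a distinct id


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def parse_response(raw: str) -> dict:
    """Return the result payload, or raise if the server reported an error."""
    message = json.loads(raw)
    if "error" in message:
        raise RuntimeError(message["error"].get("message", "unknown error"))
    return message["result"]
```

The client only ever builds and reads messages of this shape; what the server does with the underlying system (the second layer) stays hidden behind it.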

Core Components of MCP Knowledge Graph

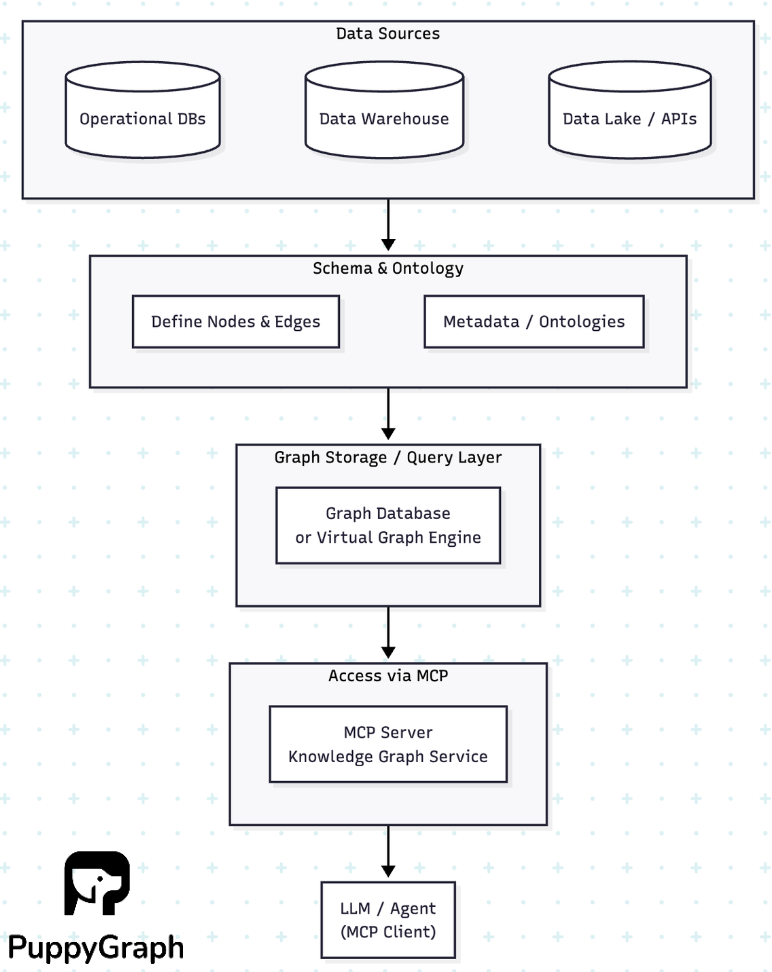

A knowledge graph combines data integration with graph modeling so that information can be understood not just as isolated records, but as connected entities. Its architecture can be thought of in four main parts:

Data Sources

The graph draws from existing systems such as operational databases, data warehouses, data lakes, or APIs. These remain the systems of record; the knowledge graph uses them as inputs rather than replacing them.

Schema and Ontology

A schema defines how raw data is mapped into nodes, edges, and properties. Ontologies and metadata add meaning, ensuring that entities such as customers, assets, or transactions are represented consistently and relationships are clear.
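As an illustration of schema mapping, the following Python sketch turns tabular rows into nodes and edges. The `SCHEMA` layout, the table and column names, and the `PLACED` edge label are all hypothetical; real graph engines define mappings in their own configuration formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    label: str
    source: str
    target: str


# Hypothetical mapping: which table becomes which node label, and which
# foreign-key column pair becomes which edge.
SCHEMA = {
    "nodes": {
        "customers": {"label": "Customer", "id": "customer_id"},
        "orders": {"label": "Order", "id": "order_id"},
    },
    "edges": [
        {"label": "PLACED", "table": "orders",
         "from": ("Customer", "customer_id"), "to": ("Order", "order_id")},
    ],
}


def map_rows(tables: dict) -> tuple[dict, list]:
    """Turn tabular rows into node and edge collections according to SCHEMA."""
    nodes = {}
    for table, spec in SCHEMA["nodes"].items():
        for row in tables.get(table, []):
            node_id = f'{spec["label"]}:{row[spec["id"]]}'
            nodes[node_id] = {"label": spec["label"], **row}
    edges = []
    for spec in SCHEMA["edges"]:
        (src_label, src_col), (dst_label, dst_col) = spec["from"], spec["to"]
        for row in tables.get(spec["table"], []):
            edges.append(Edge(spec["label"],
                              f"{src_label}:{row[src_col]}",
                              f"{dst_label}:{row[dst_col]}"))
    return nodes, edges
```

The source rows are never modified; the schema only describes how to read them as a graph.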

Graph Storage or Query Layer

Traditionally, this role has been filled by a graph database such as Neo4j, Neptune, or TigerGraph. Increasingly, organizations also use virtual graph engines that query data where it already resides. Regardless of the approach, this layer is what executes traversals, path queries, and relationship analytics.
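A multi-hop traversal is the basic operation this layer provides. The sketch below shows the idea with a breadth-first search over an in-memory adjacency map; it is a teaching example under that assumption, not how any particular graph database or virtual engine implements traversals.

```python
from collections import deque


def neighbors_within(adjacency: dict, start: str, max_hops: int) -> dict:
    """Breadth-first traversal: map each node reachable within max_hops to its hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if distance[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in adjacency.get(current, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[current] + 1
                queue.append(neighbor)
    return distance
```

For example, with `adjacency = {"a": ["b"], "b": ["c"], "c": ["d"]}`, a two-hop query from `"a"` reaches `"b"` and `"c"` but not `"d"`.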

Access and Application Interfaces

The graph must be exposed to applications through query languages or APIs. In the context of the Model Context Protocol, this interface becomes an MCP server. The MCP server presents the graph to LLM clients in a standardized way, returning structured, relationship-rich context whenever it is requested.
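The following Python sketch shows, in simplified form, what the server side of this interface does: it advertises tools and dispatches tool calls. The method names `tools/list` and `tools/call` and the response shapes follow the MCP specification, but the toy `GRAPH`, the `get_neighbors` tool, and the hand-rolled dispatcher are assumptions made for illustration. A production server would normally be built on an MCP SDK and would also handle initialization and transport.

```python
import json

# Toy adjacency data standing in for a real graph engine (illustrative only).
GRAPH = {"Customer:1": ["Order:10", "Order:11"], "Order:10": ["Product:7"]}


def get_neighbors(node_id: str) -> list:
    """Return the ids of nodes directly connected to node_id."""
    return GRAPH.get(node_id, [])


# Hypothetical tool registry: name -> handler plus the metadata a client can list.
TOOLS = {
    "get_neighbors": {
        "handler": get_neighbors,
        "description": "Return nodes directly connected to node_id.",
        "inputSchema": {
            "type": "object",
            "properties": {"node_id": {"type": "string"}},
            "required": ["node_id"],
        },
    }
}


def _reply(request: dict, key: str, payload: dict) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), key: payload})


def handle(raw_request: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the serialized response."""
    request = json.loads(raw_request)
    method, params = request.get("method"), request.get("params", {})
    if method == "tools/list":
        tools = [{"name": n, "description": t["description"],
                  "inputSchema": t["inputSchema"]} for n, t in TOOLS.items()]
        return _reply(request, "result", {"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(request, "error", {"code": -32602, "message": "Unknown tool"})
        output = tool["handler"](**params.get("arguments", {}))
        return _reply(request, "result",
                      {"content": [{"type": "text", "text": json.dumps(output)}]})
    return _reply(request, "error", {"code": -32601, "message": "Method not found"})
```

Only the tools in the registry are reachable, which is how the server controls what the client can see.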

By combining these components, an MCP Knowledge Graph acts as a bridge between enterprise data and LLMs. It organizes and models data into connected form, then makes that structure available through MCP so that models can consume and reason over it.

How MCP Knowledge Graph Works

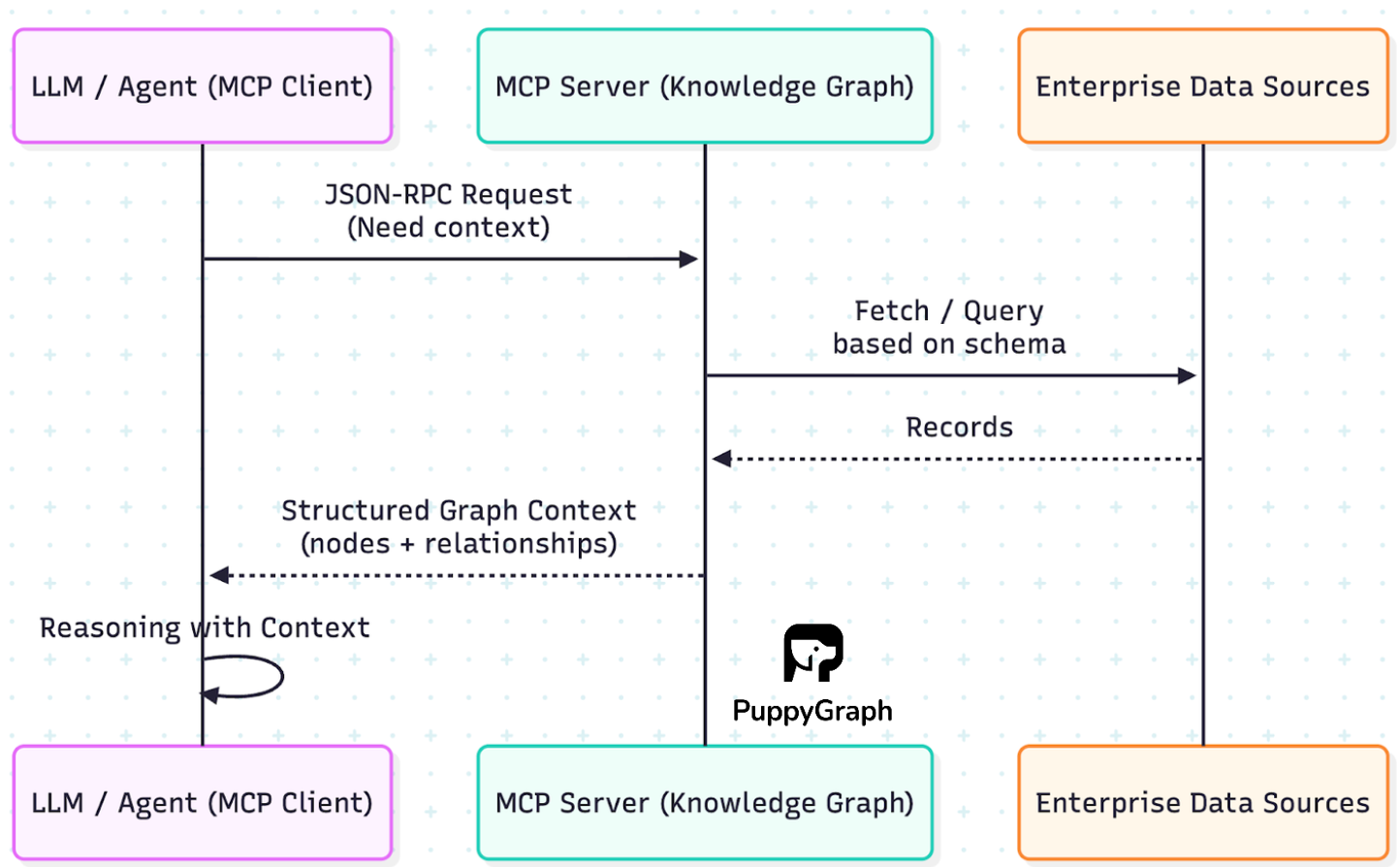

Once the components are in place, the MCP Knowledge Graph operates as a service that makes relationships directly available to LLMs through the protocol. The process can be understood as a simple lifecycle:

- Data connection: The MCP server connects to underlying enterprise systems such as databases, warehouses, or APIs. These remain the source of truth, and no special preparation is required beyond configuring the schema.

- Schema mapping: The server defines nodes, edges, and properties using the schema and ontology. This step turns raw records (such as users, transactions, or alerts) into entities and relationships.

- Query execution: When an LLM (acting as the MCP client) needs context, it issues a request through MCP. The request is expressed in JSON-RPC and passed to the MCP server. The server translates this into a graph query against its storage or virtual engine.

- Results delivery: The query results are returned to the MCP client as structured context. Instead of isolated records, the model receives connected information, for example a customer linked to orders, policies, and associated risks.

- Model reasoning: With this structured context, the LLM can generate answers that are grounded in relationships, making outputs more accurate, explainable, and relevant.
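The lifecycle above can be sketched end to end in a few lines of Python. Here an in-memory SQLite database stands in for the enterprise system of record, and the table names, the `PLACED` relationship, and the function names are all hypothetical. The point is only to show rows being read in place and returned as a small subgraph.

```python
import json
import sqlite3


def connect_source() -> sqlite3.Connection:
    """Data connection: open the system of record (an in-memory SQLite stand-in)."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT);
        INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');
        INSERT INTO orders VALUES (10, 1, 'open'), (11, 1, 'shipped'), (12, 2, 'open');
    """)
    return db


def customer_context(db: sqlite3.Connection, customer_id: int) -> dict:
    """Schema mapping and query execution: read rows as a Customer -PLACED-> Order subgraph."""
    row = db.execute("SELECT name FROM customers WHERE id = ?", (customer_id,)).fetchone()
    if row is None:
        return {"nodes": [], "edges": []}
    orders = db.execute(
        "SELECT id, status FROM orders WHERE customer_id = ? ORDER BY id", (customer_id,)
    ).fetchall()
    nodes = [{"id": f"Customer:{customer_id}", "name": row[0]}]
    nodes += [{"id": f"Order:{oid}", "status": status} for oid, status in orders]
    edges = [{"type": "PLACED", "from": f"Customer:{customer_id}", "to": f"Order:{oid}"}
             for oid, _ in orders]
    return {"nodes": nodes, "edges": edges}


def to_prompt_context(subgraph: dict) -> str:
    """Results delivery: serialize the subgraph so it can be handed to the model."""
    return json.dumps(subgraph, sort_keys=True)
```

The final step, model reasoning, happens on the client side once this serialized context is placed in the conversation.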

Key Features & Capabilities

The value of an MCP Knowledge Graph comes from combining graph modeling with the standardized access that MCP provides. Some of the defining capabilities are:

Context Exposure Through MCP

The knowledge graph is presented as an MCP server. This means LLM clients can call it using the same JSON-RPC protocol they use for other MCP services, without needing custom adapters for graph data.

Unified View Across Services

Because an MCP client application can connect to multiple servers in the same session, a knowledge graph can sit alongside other services such as search, retrieval, or workflow tools. The graph adds a connected view of entities and relationships that complements those other context sources.

Graph-Aware LLM Queries

Through MCP, an LLM can issue graph queries (for example, “find all suppliers linked to a given product and check their risk scores”) and receive structured results. The model doesn’t need to know the underlying query language; MCP abstracts that interaction into a standard request–response pattern.
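One way a server might hide the query language is to accept structured arguments and build the graph query itself. The sketch below does this for the supplier example. The `Supplier` and `Product` labels, the `SUPPLIES` relationship, the `risk_score` property, and the function name are assumptions about a hypothetical schema, and the output is a parameterized openCypher query rather than an executed result.

```python
def supplier_risk_query(product_id: str, min_risk: float = 0.0) -> tuple[str, dict]:
    """Build a parameterized Cypher query from structured tool arguments."""
    query = (
        "MATCH (s:Supplier)-[:SUPPLIES]->(p:Product {id: $product_id}) "
        "WHERE s.risk_score >= $min_risk "
        "RETURN s.name AS supplier, s.risk_score AS risk "
        "ORDER BY risk DESC"
    )
    # Values travel as parameters, never spliced into the query text.
    return query, {"product_id": product_id, "min_risk": min_risk}
```

Passing values as parameters keeps model-supplied input out of the query string, which matters when the caller is an LLM.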

Security and Governance Integration

MCP servers enforce what is exposed and how. By wrapping the knowledge graph in MCP, enterprises can ensure that only approved graph views and functions are made available to the model, aligned with existing governance practices.
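A simple form of this control is an allowlist applied before results leave the server. The Python sketch below filters nodes by label and strips sensitive properties. The label set, the property names, and the `filter_nodes` helper are hypothetical; real deployments would typically tie such rules to existing access policies.

```python
# Hypothetical governance rules for one approved graph view.
ALLOWED_LABELS = {"Customer", "Order"}
SENSITIVE_PROPERTIES = {"ssn", "credit_card"}


def filter_nodes(nodes: list) -> list:
    """Keep only nodes with approved labels and drop sensitive properties from them."""
    visible = []
    for node in nodes:
        if node.get("label") not in ALLOWED_LABELS:
            continue  # label is outside the approved view
        visible.append({key: value for key, value in node.items()
                        if key not in SENSITIVE_PROPERTIES})
    return visible
```

Because the filter runs inside the server, the model never receives the excluded data in the first place.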

Composable Context

The MCP Knowledge Graph is not isolated. Its results can be combined with those from other MCP servers in the same session, giving LLMs richer, multi-source grounding without breaking interoperability.

Benefits of MCP Knowledge Graph

Enterprises invest in knowledge graphs because they make relationships explicit, but the combination with the Model Context Protocol expands that value. Together they offer several important benefits:

Grounded Model Outputs

By exposing a knowledge graph through MCP, LLMs no longer rely only on unstructured text or disconnected records. They can base answers on structured relationships, improving accuracy and reducing hallucinations.

Faster Decision-Making

MCP provides a standard interface to query the graph in real time. This shortens the path from a business question to an answer, as users and applications can surface connected context immediately rather than waiting for custom integrations or manual analysis.

Reduced Integration Costs

Traditionally, connecting a knowledge graph to downstream applications has required bespoke connectors or APIs. MCP reduces this overhead by making the graph available through the same protocol used for other context sources.

Consistency Across Domains

With MCP, multiple knowledge graphs—customer, supply chain, cybersecurity—can be exposed side by side. This ensures consistent access patterns for LLMs while preserving the ability to tailor each graph to its domain.

Explainability and Compliance

Graph-based results are inherently traceable: a model’s answer can be mapped back to the entities and relationships that supported it. Delivered through MCP, this traceability is available in a controlled, auditable way that aligns with enterprise governance requirements.
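Traceability can be as simple as returning the path that supports an answer alongside the answer itself. The sketch below finds the shortest chain of relationships between two entities with a breadth-first search. The edge triples and the `find_path` name are illustrative assumptions, not the output format of any specific product.

```python
from collections import deque


def find_path(edges: list, start: str, goal: str):
    """Return the shortest list of (source, relation, target) hops from start to goal, or None."""
    adjacency = {}
    for source, relation, target in edges:
        adjacency.setdefault(source, []).append((relation, target))
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, target in adjacency.get(node, []):
            if target not in seen:
                seen.add(target)
                queue.append((target, path + [(node, relation, target)]))
    return None
```

An auditor can read the returned hops directly, for example an alert raised on an asset that is governed by a given policy.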

Business Use Cases of MCP Knowledge Graph

An MCP Knowledge Graph is not just a technical pattern — it directly supports real-world business needs by making contextual data available to LLMs in a standardized way. Some representative use cases include:

Cybersecurity and Risk Analysis

Security teams often use attack path or threat graphs to model vulnerabilities and incidents. As an MCP server, this graph becomes a live context source for LLM-based assistants. They can explain likely attack paths, surface related alerts, and recommend mitigations grounded in the organization’s own environment.

Fraud Detection and Financial Networks

Financial transactions form complex networks where fraud rings hide. A graph makes hidden connections visible, while MCP provides a standard way for LLMs to query those connections alongside other investigative tools.
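As a small illustration of how a graph surfaces hidden connections, the sketch below groups accounts that share any identifier, such as a device or phone number, using a union-find structure. The account data and the `find_rings` name are made up for the example; production fraud detection would combine many more signals.

```python
def find_rings(accounts: dict) -> list:
    """Group accounts that share any identifier into connected components of size > 1."""
    parent = {account: account for account in accounts}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    owner = {}  # identifier -> first account seen using it
    for account, identifiers in accounts.items():
        for ident in identifiers:
            if ident in owner:
                parent[find(account)] = find(owner[ident])
            else:
                owner[ident] = account

    groups = {}
    for account in accounts:
        groups.setdefault(find(account), set()).add(account)
    return sorted((sorted(g) for g in groups.values() if len(g) > 1),
                  key=lambda g: g[0])
```

Accounts A and C below never share an identifier directly, yet they end up in the same group through B: `find_rings({"A": {"dev1"}, "B": {"dev1", "ph9"}, "C": {"ph9"}, "D": {"dev2"}})`.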

Customer 360 and Identity Graphs

Organizations want a complete view of each customer: interactions, purchases, support history, and risk signals. By exposing this graph through MCP, LLMs can answer complex questions such as “Which high-value customers have open support cases linked to unresolved product issues?” without stitching data manually.

Supply Chain Intelligence

Supply chains involve suppliers, shipments, facilities, and risk factors. A knowledge graph can map these dependencies, and MCP makes it accessible to models that monitor disruptions or evaluate alternatives. For example, an LLM could query which suppliers of a critical part are concentrated in a single region, highlighting potential risk.
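The regional concentration check in that example reduces to a short aggregation over supplier-to-part edges. The following Python sketch assumes a list of `(supplier, part)` pairs and a supplier-to-region lookup, both hypothetical inputs that a graph query could produce.

```python
from collections import defaultdict


def single_region_parts(supplies: list, supplier_region: dict) -> dict:
    """Return {part: region} for parts whose suppliers all sit in one region."""
    regions_by_part = defaultdict(set)
    for supplier, part in supplies:
        regions_by_part[part].add(supplier_region[supplier])
    return {part: next(iter(regions))
            for part, regions in regions_by_part.items()
            if len(regions) == 1}
```

Parts that appear in the result depend entirely on one region and are candidates for a closer risk review.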

Knowledge Augmentation for AI

LLMs excel at generating language but lack structured knowledge. Exposing a knowledge graph through MCP provides that grounding. This enables graph-based RAG patterns, where models combine graph traversals with text generation to answer questions more accurately and transparently.

How PuppyGraph Helps

PuppyGraph is a zero-ETL graph query engine that can function as the backend for an MCP Knowledge Graph. Instead of copying data into a graph database, PuppyGraph directly queries existing enterprise systems, such as data warehouses, databases, or lakes, as a graph. Some key capabilities of PuppyGraph include:

- Zero ETL: Query data in place without building or maintaining pipelines.

- Multiple Graph Views: Define different schemas over the same tables, so teams can see the data in ways that match their needs.

- Scalability: Handle petabyte-scale datasets and multi-hop queries in seconds.

- Standards Support: Use Gremlin or openCypher to express graph queries naturally.

These features make PuppyGraph an ideal engine for serving enterprise knowledge graphs at scale.

The PuppyGraph MCP Server complements the engine by making a knowledge graph available as an MCP-compliant service. It receives requests from any MCP client and translates them into queries against PuppyGraph. PuppyGraph, in turn, executes those queries directly on enterprise data sources.

In our demo, we use Claude Desktop as the MCP client. The client communicates with the PuppyGraph MCP Server over stdio, one of the standard transports defined by the MCP specification. From the client’s perspective, the graph is just another set of MCP tools, such as puppygraph_query or puppygraph_schema. The server handles the translation into Gremlin or Cypher queries, executes them against PuppyGraph, and returns structured graph results to the client.

For example, we start a new chat in Claude Desktop and ask: “What is the current graph in PuppyGraph?” Claude Desktop uses the local MCP server we configured. Behind the scenes, it calls the puppygraph_status and puppygraph_schema tools, and returns the available node labels, edge types, and properties. The answer comes back in natural language, but it is grounded in the schema exposed by the PuppyGraph MCP Server.

We can then go further and ask: “Which employees have managed the most orders?” Claude interprets the request, translates it into a Cypher query through the puppygraph_query tool, and sends it via stdio to the PuppyGraph MCP Server. The server runs the query against PuppyGraph, which executes it directly on the underlying data. The results are passed back, and Claude presents a concise, structured answer along with the raw table of employee names and order counts.
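At the wire level, this exchange might look like the Python sketch below. The MCP stdio transport sends each JSON-RPC message as a single newline-delimited line, and `puppygraph_query` is the tool named above. The Cypher text, the `Employee`/`MANAGED`/`Order` schema, and the `query` argument name are our assumptions for illustration and may differ from the actual server.

```python
import io
import json


def frame(message: dict) -> bytes:
    """Encode one JSON-RPC message as a single newline-terminated line."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def read_messages(stream):
    """Yield decoded messages from a byte stream, one per non-empty line."""
    for line in stream:
        if line.strip():
            yield json.loads(line)


# Hypothetical Cypher for the demo question; labels and property names are assumed.
CYPHER = (
    "MATCH (e:Employee)-[:MANAGED]->(o:Order) "
    "RETURN e.name AS employee, count(o) AS orders "
    "ORDER BY orders DESC LIMIT 5"
)

# The argument key "query" is an assumption about the tool's input schema.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "puppygraph_query", "arguments": {"query": CYPHER}},
}
```

In a real session the client writes `frame(request)` to the server process's standard input and reads the response line from its standard output; `io.BytesIO` can stand in for those pipes when experimenting.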

This interaction shows how PuppyGraph, when wrapped as an MCP server, becomes a live context provider for LLMs. Instead of hard-coding queries or writing glue code, the model itself can explore the graph, retrieve results, and explain them — all through the standardized MCP interface.

Conclusion

The Model Context Protocol offers a standard way for LLMs to access external data and tools. A knowledge graph fits naturally into this pattern: it organizes information as entities and relationships and exposes that connected view through MCP. This makes it easier for models to ground their reasoning in structured context and explain their answers more clearly.

PuppyGraph makes the idea of an MCP Knowledge Graph practical. Its zero-ETL approach queries existing data directly, while the PuppyGraph MCP Server wraps that capability as an MCP-compliant service. With an MCP client, we can explore and query graphs without custom integration work.

If you are interested in trying this out, explore the forever-free PuppyGraph Developer Edition and PuppyGraph MCP server to start building your own MCP Knowledge Graph, or book a free demo with our team!

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single node

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in Developer Edition, plus enterprise features

- Designed for production

- Available via AWS AMI & Docker install