TigerGraph vs JanusGraph: Key Differences & Comparison

Graph databases have become foundational infrastructure for modern applications that rely heavily on connected data, such as real-time recommendations, fraud detection, master data management, cybersecurity analytics, knowledge graphs, telecom network operations, and biomedical research. As organizations ingest increasingly complex datasets, the choice of graph database affects not only performance but also modeling flexibility, scalability, operational complexity, and total cost of ownership. Two widely discussed options in the enterprise and open-source ecosystem are TigerGraph and JanusGraph.

TigerGraph is a proprietary, high-performance distributed graph engine built for deep link traversal across massive datasets. Its GSQL query language and parallel execution model target enterprises that need high-throughput analytical workloads. JanusGraph, in contrast, is an open-source, pluggable graph engine that runs on storage backends such as Cassandra, ScyllaDB, and HBase and can use index backends such as Elasticsearch. It relies on Gremlin, the widely adopted traversal language of the Apache TinkerPop stack.

This article offers a thorough, multi-dimensional comparison of TigerGraph and JanusGraph, spanning architecture, query languages, storage design, indexing mechanisms, scalability, consistency models, schema flexibility, analytics capability, and operational considerations. It also includes real-world use cases, performance insights, and a feature-by-feature comparison table. Finally, for teams seeking a modern, cloud-native alternative without the complexity of JanusGraph or the vendor lock-in of TigerGraph, the article introduces PuppyGraph as a flexible and high-performance option.

What Is TigerGraph?

TigerGraph is a distributed, massively parallel processing (MPP) native graph database designed to handle deep-link analytics across extremely large datasets. Unlike multi-model engines that treat graphs as an additional feature on top of existing storage systems, TigerGraph was engineered from the ground up as a specialized property graph engine suited for high-throughput traversal and real-time graph computation. Its architecture emphasizes compressed storage, vectorized execution, and distributed parallelism across clusters, enabling complex graph computations such as multi-hop traversals, connected-component detection, recommendation scoring, and fraud ring discovery at scale.

TigerGraph markets itself as a real-time graph analytics system rather than a general-purpose transactional datastore. Its strengths align with workloads involving heavy graph algorithms, large fan-out queries, and dynamic user-level decisioning pipelines that require fast responses from multi-hop graph exploration. Because TigerGraph uses its own language, GSQL, and a proprietary runtime, teams that choose it typically invest in a specialized development and operational stack. However, the performance benefits for certain analytics workloads can be substantial, and TigerGraph is widely adopted in sectors such as fintech, e-commerce, supply chain, healthcare, and telecommunications.

Key Features of TigerGraph

TigerGraph offers a suite of features oriented toward high-performance graph analytics. Unlike systems built on top of existing data engines, TigerGraph provides both a native storage layer and a distributed execution framework, tightly integrated for traversal-heavy workloads. The paragraphs below cover its main capabilities.

TigerGraph’s GSQL query language is one of its defining components. The language aims to balance declarative expressiveness with procedural control, allowing developers to write complex graph algorithms that run directly within the engine. GSQL compiles queries into optimized dataflow operators, enabling significant performance improvements compared to bytecode-interpreted traversal languages.

Another major feature is TigerGraph’s parallel execution model. The system is built on an MPP architecture that partitions the graph across multiple machines, allowing vertex-parallel and edge-parallel strategies to speed up traversals. TigerGraph supports multi-hop queries that can traverse billions of edges in seconds by exploiting this distributed model.
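To make the partitioned, vertex-parallel idea concrete, here is a minimal Python sketch of a k-hop traversal over a graph split across partitions. It is an illustration only, not TigerGraph's implementation: the modulo partitioning scheme, the thread pool standing in for cluster nodes, and every function name are assumptions made for the example.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition_of(vertex, num_partitions):
    """Toy placement rule (assumption): a vertex lives on partition `vertex % n`."""
    return vertex % num_partitions


def build_partitions(edges, num_partitions):
    """Store each vertex's out-edges on the partition that owns the vertex."""
    partitions = [defaultdict(list) for _ in range(num_partitions)]
    for src, dst in edges:
        partitions[partition_of(src, num_partitions)][src].append(dst)
    return partitions


def expand_local(adjacency, local_frontier):
    """One partition expands only the frontier vertices it owns."""
    reached = set()
    for vertex in local_frontier:
        reached.update(adjacency.get(vertex, ()))
    return reached


def k_hop(edges, start, hops, num_partitions=3):
    """Return the vertices reachable from `start` within `hops` hops."""
    partitions = build_partitions(edges, num_partitions)
    visited = {start}
    frontier = {start}
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        for _ in range(hops):
            # Route every frontier vertex to the partition that owns it.
            local = [set() for _ in range(num_partitions)]
            for vertex in frontier:
                local[partition_of(vertex, num_partitions)].add(vertex)
            # All partitions expand their share of the frontier in parallel.
            results = pool.map(expand_local, partitions, local)
            frontier = set().union(*results) - visited
            if not frontier:
                break
            visited |= frontier
    return visited - {start}


edges = [(1, 2), (2, 3), (3, 4), (1, 5), (5, 6), (4, 7)]
print(sorted(k_hop(edges, start=1, hops=2)))
```

Each hop is a synchronization point: partitions work independently on their local frontier, and the merged result becomes the next frontier. A production engine adds compression, network exchange, and load balancing on top of this basic pattern.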

TigerGraph also emphasizes streaming data ingestion, offering connectors to Kafka and batch-loading tools. The ingestion pipeline supports high-throughput ETL operations, combining compression, partial updates, and rebalancing to keep datasets consistent with upstream systems.

On the administrative side, TigerGraph includes a rich set of monitoring tools, including built-in dashboards, cluster health panels, and performance diagnostics. Enterprises can use these capabilities to manage and optimize large-scale deployments across many nodes.

Finally, TigerGraph offers enterprise security features such as role-based access, encryption, LDAP integration, and fine-grained permission models. These are critical for regulated industries and large enterprises adopting the database for mission-critical applications.

What Is JanusGraph?

JanusGraph is an open-source, distributed graph database designed for large-scale transactional and analytical workloads. Forked from the Titan project, JanusGraph builds on the broader Apache TinkerPop ecosystem and inherits a strong focus on portability, backend extensibility, and interoperability. Unlike TigerGraph, which provides a tightly integrated all-in-one system, JanusGraph is designed as a pluggable layer that operates on top of existing storage technologies such as Apache Cassandra, ScyllaDB, Google Bigtable, HBase, BerkeleyDB, and others.

Because JanusGraph does not define its own query language or computation engine, it relies on Gremlin, the traversal language of Apache TinkerPop. Gremlin is a functional, step-by-step traversal DSL that supports both OLTP and OLAP graph workloads. With JanusGraph, Gremlin runs either through Gremlin Server or embedded directly in the application. For larger analytics workloads, JanusGraph can integrate with systems like Spark to run batch graph computations, although this path is not as tightly optimized as TigerGraph’s native parallel model.
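The step-by-step style is easiest to see in a small example. The Python sketch below is a toy, in-memory imitation of step chaining and is not the TinkerPop or gremlinpython API; the `Traversal` class, the `V` helper, and the dictionary-based graph layout are all assumptions made for illustration. The chained call mirrors the shape of a Gremlin traversal such as `g.V().has('name', 'alice').out('knows').out('knows').dedup().values('name')`.

```python
class Traversal:
    """Toy step-chained traversal: each step returns a new Traversal."""

    def __init__(self, graph, current):
        self.graph = graph
        self.current = list(current)  # vertex ids at the current step

    def has(self, key, value):
        """Keep only vertices whose property `key` equals `value`."""
        keep = [v for v in self.current
                if self.graph["vertices"][v].get(key) == value]
        return Traversal(self.graph, keep)

    def out(self, label):
        """Move to the targets of outgoing edges carrying `label`."""
        targets = [dst
                   for v in self.current
                   for (src, edge_label, dst) in self.graph["edges"]
                   if src == v and edge_label == label]
        return Traversal(self.graph, targets)

    def dedup(self):
        """Drop repeated vertices while preserving order."""
        return Traversal(self.graph, dict.fromkeys(self.current))

    def values(self, key):
        """Terminal step: read one property from each remaining vertex."""
        return [self.graph["vertices"][v][key] for v in self.current]


def V(graph):
    """Start a traversal from every vertex in the graph."""
    return Traversal(graph, graph["vertices"])


graph = {
    "vertices": {
        1: {"name": "alice"},
        2: {"name": "bob"},
        3: {"name": "carol"},
        4: {"name": "dave"},
    },
    "edges": [(1, "knows", 2), (1, "knows", 3), (2, "knows", 4), (3, "knows", 4)],
}

friends_of_friends = (
    V(graph).has("name", "alice").out("knows").out("knows").dedup().values("name")
)
print(friends_of_friends)  # ['dave']
```

Each step consumes the traversers produced by the previous one, which is why traversals read naturally for path-style questions but grow long when expressing iterative algorithms.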

JanusGraph is frequently selected by engineering teams seeking an open-source, vendor-neutral, and flexible graph architecture. Because it integrates with a wide ecosystem of storage and indexing backends, organizations can tailor deployments based on their operational environment. This flexibility, however, requires expertise in distributed system tuning, and performance varies widely depending on backend configuration and workload patterns.

Key Features of JanusGraph

JanusGraph includes a broad set of features centered on flexibility, extensibility, and open standards. The paragraphs below describe its most notable capabilities.

One of JanusGraph’s most significant features is its pluggable storage backend architecture. Rather than imposing its own storage layer, JanusGraph allows teams to choose among distributed databases like Cassandra or ScyllaDB for horizontal scalability, HBase for tight integration with Hadoop ecosystems, or BerkeleyDB for lightweight embedded deployments. This makes JanusGraph highly adaptable to different operational ecosystems.
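In practice, the backend choice is expressed through configuration rather than code. The Python sketch below renders a minimal JanusGraph-style properties file for different backend combinations. The keys `storage.backend`, `storage.hostname`, `index.search.backend`, and `index.search.hostname` are JanusGraph configuration options; the helper function and the hostnames are hypothetical, and a real deployment needs additional backend-specific settings.

```python
def render_properties(storage_backend, storage_hostname,
                      index_backend=None, index_hostname=None):
    """Render a minimal properties file for one storage/index combination.

    Hypothetical helper for illustration; it covers only the four basic keys.
    """
    lines = [
        f"storage.backend={storage_backend}",
        f"storage.hostname={storage_hostname}",
    ]
    if index_backend is not None:
        lines.append(f"index.search.backend={index_backend}")
        if index_hostname is not None:
            lines.append(f"index.search.hostname={index_hostname}")
    return "\n".join(lines)


# Cassandra or ScyllaDB (both reached through the CQL adapter) plus Elasticsearch.
# The hostnames are placeholders.
cql_config = render_properties("cql", "cassandra.internal",
                               "elasticsearch", "es.internal")

# Same graph layer on HBase: only the storage lines change.
hbase_config = render_properties("hbase", "hbase.internal",
                                 "elasticsearch", "es.internal")

print(cql_config)
```

The application-level graph code stays the same across these configurations, which is the practical meaning of "pluggable" here. Performance and operational behavior, however, follow the backend that was chosen.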

Another central component is its integration with index backends such as Elasticsearch, Solr, and Lucene. These backends power mixed indexes, which support full-text search, geospatial queries, numeric range predicates, and more; composite indexes for exact-match lookups are stored in the storage backend and need no index backend. In contrast to TigerGraph’s built-in indexing, JanusGraph’s richer index features depend on these additional components, which adds flexibility but also operational overhead.

JanusGraph’s adherence to Apache TinkerPop provides strong interoperability. Developers can use Gremlin with multiple graph systems interchangeably, enabling skill reuse and multi-engine pipelines. Gremlin’s stepwise traversal model is expressive, but may become verbose for algorithmic patterns compared to TigerGraph’s GSQL.

The system also offers schema management, allowing users to define property keys, edge labels, vertex labels, and indexes that map onto the capabilities of the chosen backends. Although an explicit schema is not strictly required, defining one carefully is essential for performance.

Finally, JanusGraph supports clustered deployments with fault tolerance and multi-data-center replication when paired with robust backends like Cassandra or ScyllaDB. Its performance characteristics heavily depend on backend tuning, consistency settings, and index synchronization configurations, making expertise and operational discipline critical.
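As a worked example of why consistency settings matter, consider a Cassandra-style backend with tunable consistency. With replication factor N, a read that contacts R replicas is guaranteed to overlap with a write acknowledged by W replicas only when R + W > N. The short Python sketch below checks that condition; the function names are illustrative and not part of any JanusGraph or Cassandra API.

```python
def quorum(replication_factor):
    """Smallest majority of replicas: floor(N / 2) + 1."""
    return replication_factor // 2 + 1


def reads_see_latest_write(replication_factor, read_replicas, write_replicas):
    """True when every read set must intersect every write set (R + W > N)."""
    return read_replicas + write_replicas > replication_factor


n = 3
q = quorum(n)  # 2 replicas out of 3

# Quorum reads with quorum writes overlap on at least one replica.
print(reads_see_latest_write(n, q, q))  # True

# Single-replica reads and writes are faster but may return stale data.
print(reads_see_latest_write(n, 1, 1))  # False
```

Lower consistency levels reduce latency, while quorum-level settings trade some throughput for reads that reflect the latest acknowledged write. This is one reason the same JanusGraph workload can behave very differently across deployments.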

TigerGraph vs JanusGraph: Feature Comparison

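The table below summarizes the comparison points covered in this article.

| Aspect | TigerGraph | JanusGraph |
| --- | --- | --- |
| Licensing | Proprietary, commercial | Open source |
| Architecture | Native graph engine with integrated storage and distributed execution | Pluggable graph layer on top of external storage backends |
| Query language | GSQL | Gremlin (Apache TinkerPop) |
| Storage | Built-in native storage layer | Cassandra, ScyllaDB, HBase, Google Bigtable, BerkeleyDB, and others |
| Indexing | Built in | Graph indexes, with optional index backends such as Elasticsearch, Solr, and Lucene |
| Analytics | MPP execution inside the engine | Batch analytics through integration with systems such as Spark |
| Scaling | Storage and computation scale together | Determined by the chosen backend and its tuning |
| Typical use cases | Fraud detection, recommendation systems, network and path analysis | Knowledge graphs, metadata management, data cataloging |
| Cost drivers | Cluster resources and enterprise licensing | No license fee, but backend operations and tuning effort |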

Which One Is Right for You?

The right choice depends on how your workload aligns with each platform’s strengths:

Transactional vs Analytical

TigerGraph is primarily optimized for analytical graph workloads, particularly large-scale multi-hop traversals, graph algorithms, and iterative, multi-stage computations. Its native distributed parallel engine makes it well suited for use cases such as fraud detection, recommendation systems, and network or path analysis.

JanusGraph supports both real-time transactional traversals and analytical queries through Gremlin, but overall performance is strongly influenced by the chosen storage and indexing backends. It is commonly used for knowledge graphs, metadata management, and data cataloging, where schema flexibility and open-source integration are important.

Scaling Boundaries

TigerGraph scales horizontally using a tightly integrated distributed architecture in which storage and computation scale together, helping maintain predictable performance for traversal-heavy and computation-intensive workloads.

JanusGraph’s scalability is determined by its underlying backend (such as Cassandra, HBase, or ScyllaDB). Characteristics such as read/write throughput, fault tolerance, and global index behavior depend on backend configuration and tuning.

Query Language

TigerGraph uses GSQL, a high-level graph query language designed for expressing complex traversal logic, accumulations, and multi-phase analytical workflows. While it requires learning a TigerGraph-specific language, it provides strong support for advanced graph analytics.
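The accumulate-then-continue pattern can be sketched outside GSQL. The Python example below imitates a two-phase, accumulator-style recommendation query on a small purchase graph: phase one counts shared items per co-purchaser, and phase two turns those counts into item scores. It is an analogy only, written in plain Python rather than GSQL, and the data, function name, and scoring rule are assumptions made for illustration.

```python
from collections import defaultdict


def recommend(purchases, user, top_n=3):
    """Score items the user has not bought, using a two-phase traversal.

    `purchases` is a list of (user, item) edges.
    """
    items_of = defaultdict(set)
    users_of = defaultdict(set)
    for buyer, item in purchases:
        items_of[buyer].add(item)
        users_of[item].add(buyer)

    owned = items_of[user]

    # Phase 1: user -> items -> co-purchasers.
    # Accumulate, per co-purchaser, how many items they share with the user.
    shared = defaultdict(int)
    for item in owned:
        for other in users_of[item]:
            if other != user:
                shared[other] += 1

    # Phase 2: co-purchasers -> their items.
    # Accumulate, per unseen item, the overlap weight of everyone who bought it.
    score = defaultdict(int)
    for other, weight in shared.items():
        for item in items_of[other]:
            if item not in owned:
                score[item] += weight

    # Highest score first; ties broken alphabetically for stable output.
    return sorted(score.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


purchases = [
    ("ann", "book"), ("ann", "lamp"),
    ("bob", "book"), ("bob", "lamp"), ("bob", "desk"),
    ("cat", "book"), ("cat", "chair"), ("cat", "desk"),
]
print(recommend(purchases, "ann"))  # [('desk', 3), ('chair', 1)]
```

In GSQL, the per-vertex dictionaries would be accumulators attached to vertices and updated in parallel as the traversal proceeds, which is what makes multi-phase logic like this compact to express.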

JanusGraph relies on Gremlin from Apache TinkerPop, offering portability across multiple graph systems and strong ecosystem interoperability, though complex algorithmic logic can be more verbose to express.

Cost

TigerGraph deployments, particularly at scale, may require carefully planned cluster resources and enterprise licensing, which can increase total cost of ownership for large analytical workloads.

JanusGraph itself is open source and can leverage existing backend infrastructure, potentially reducing licensing costs, but operational complexity and backend tuning can increase ongoing maintenance effort.

Ecosystem Fit

TigerGraph is best suited for organizations focused on high-performance graph analytics and deep computational pipelines, and that are comfortable operating a dedicated, proprietary graph platform.

JanusGraph is well suited for teams that prioritize open-source flexibility, extensible backend choices, and integration with existing NoSQL and big-data ecosystems.

When to Choose TigerGraph vs JanusGraph

Building on the factors discussed above, the following scenarios illustrate when TigerGraph or JanusGraph is the more suitable choice. TigerGraph is designed for large-scale graph analytics and deep traversal workloads through its tightly integrated graph engine, while JanusGraph emphasizes open-source extensibility and integration with external storage and indexing systems.

Choose TigerGraph When:

- You need efficient execution of multi-hop traversals and analytical queries on large graphs, particularly where parallel computation and distributed execution are important.

- Graph analytics, algorithmic processing, or complex traversal logic are central to your workloads, and you benefit from a query language designed for expressing iterative and multi-stage graph computations.

- Your workloads involve high fan-out or large intermediate result sets, and you prefer an engine optimized for parallel traversal execution rather than relying on external storage-layer optimizations.

- Your team prefers a tightly integrated graph database system, where storage, computation, and query execution are managed within a single platform, reducing the need to operate multiple backend components.

- You are comfortable adopting a commercial, proprietary graph database platform, accepting the associated licensing model and potential long-term dependency on a single vendor.

Choose JanusGraph When:

- Open-source licensing, cloud portability, and backend flexibility are priorities, and you want to deploy JanusGraph on top of existing systems such as Cassandra, ScyllaDB, or HBase.

- Your application focuses on knowledge graphs, metadata management, or schema-rich domains, and benefits from JanusGraph’s support for global graph indexes and optional integration with external full-text search engines.

- Traversal depth is moderate, but schema design flexibility, custom indexing strategies, and integration with the Gremlin ecosystem are important to your use case.

- Your team has strong DevOps and distributed systems experience, enabling you to deploy, tune, and maintain JanusGraph together with its chosen storage and indexing backends.

- You want to leverage existing infrastructure and avoid proprietary vendor lock-in, favoring an ecosystem built entirely on open-source components.

Why Consider PuppyGraph as an Alternative

TigerGraph and JanusGraph each offer distinct strengths. TigerGraph provides a native graph architecture optimized for large-scale, parallel graph analytics, while JanusGraph is open source with pluggable storage and indexing backends. Both approaches, however, require dedicated infrastructure: TigerGraph needs purpose-built graph storage and specialized tooling, whereas JanusGraph demands careful configuration and management of multiple backend systems, which can increase operational complexity and cost.

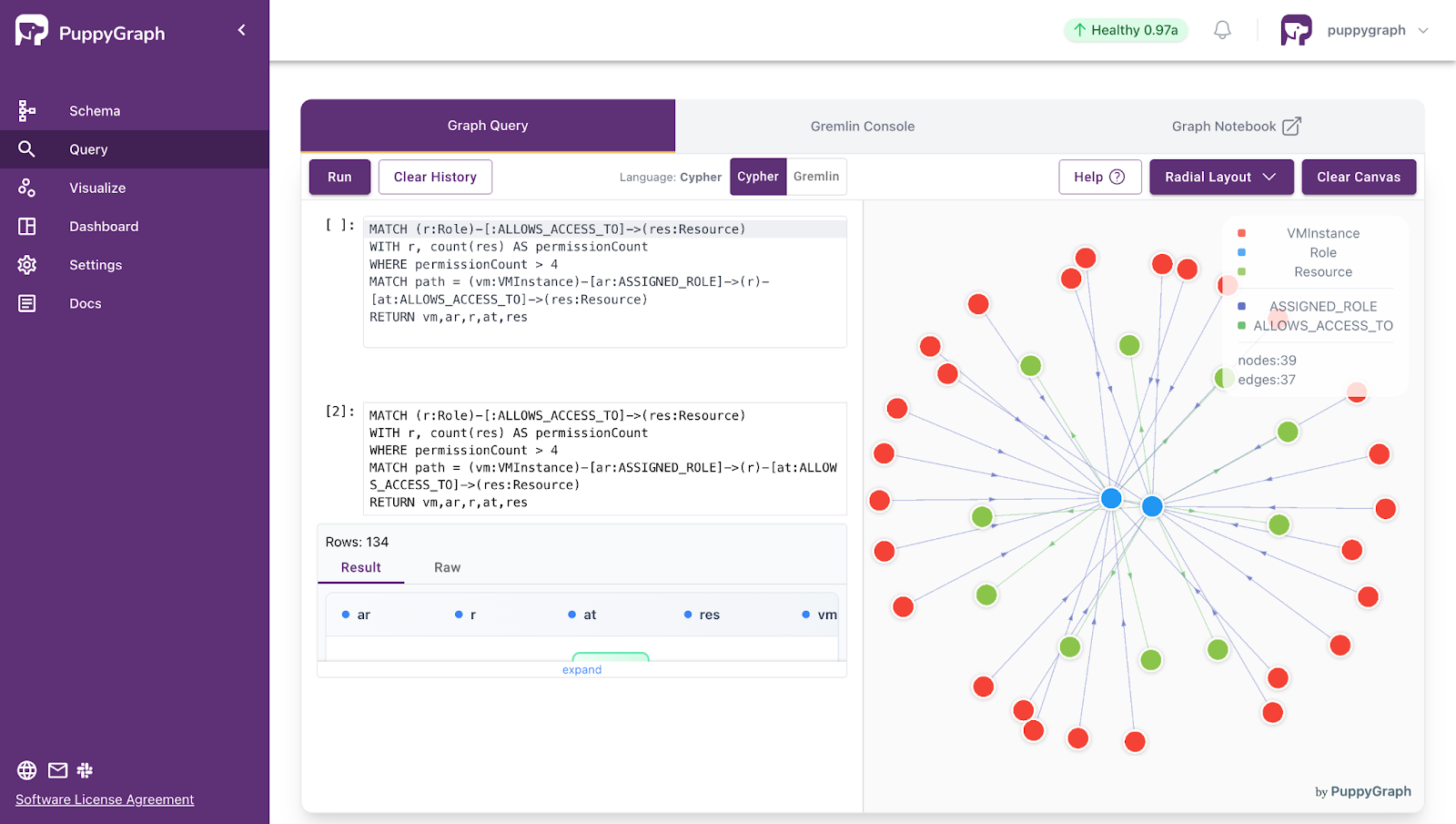

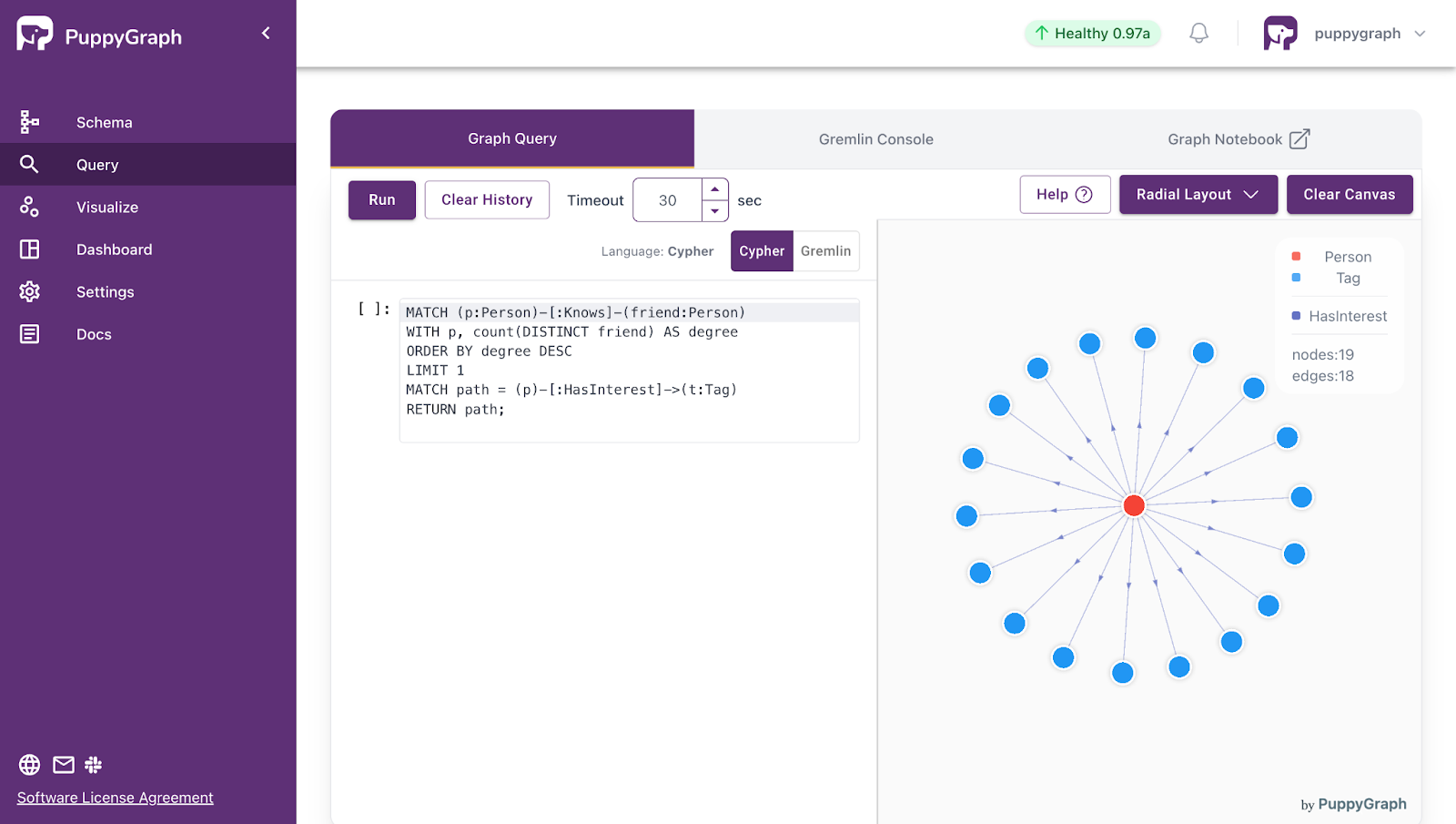

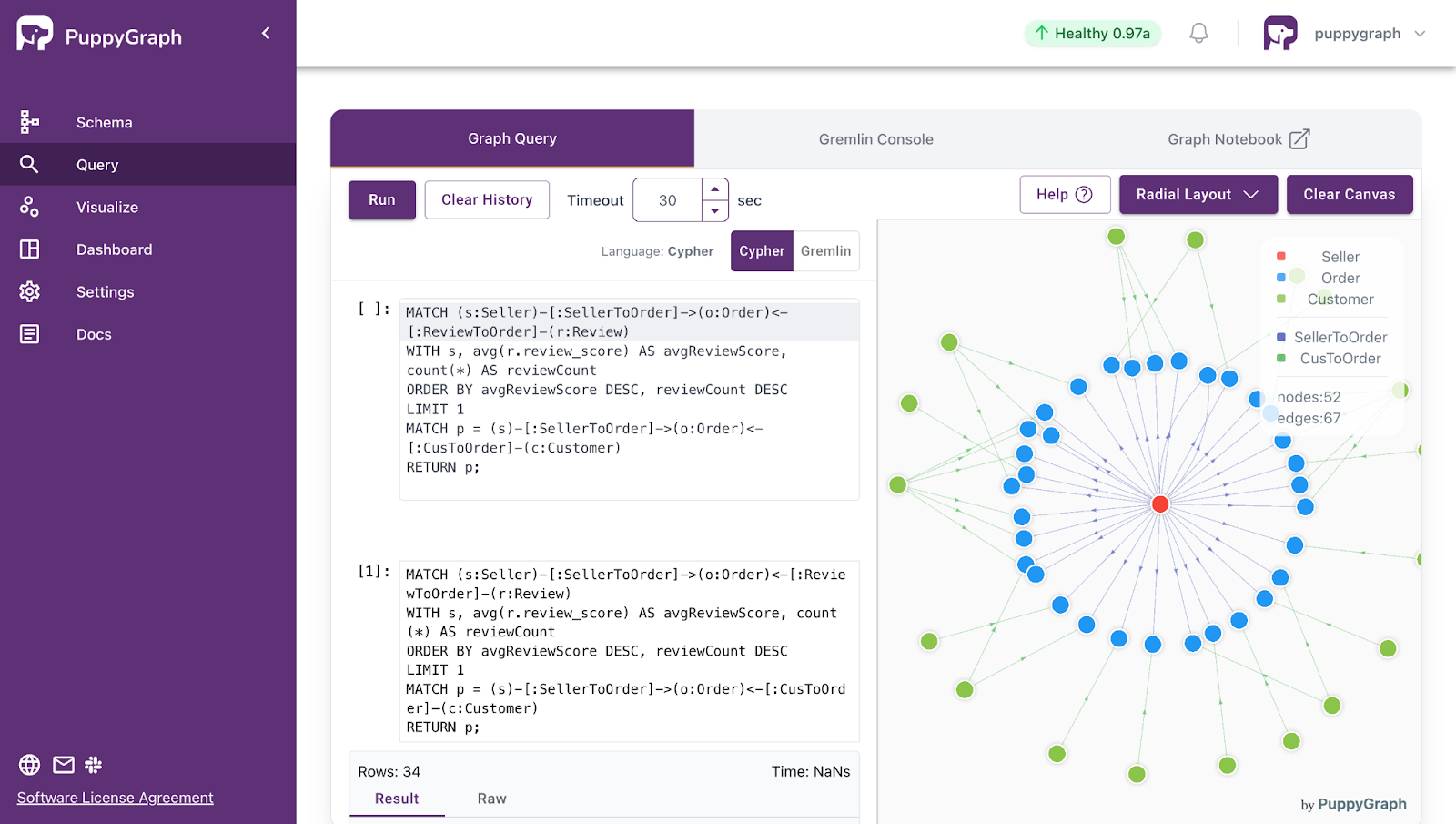

For enterprises with existing relational databases, data warehouses, or lakehouse environments, PuppyGraph offers a different approach. It enables graph queries directly on current data sources without large-scale ETL or separate graph storage. This “zero-ETL” model allows multi-hop graph analysis while leveraging existing infrastructure, providing a balance of performance, simplicity, and operational efficiency. PuppyGraph supports common graph query languages, enabling teams to gain graph insights quickly without duplicating or migrating data.
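The general idea of querying relational data as a graph, without copying it, can be sketched with the Python standard library. In the example below, a small mapping describes which table and columns form the edges, and each hop of the traversal runs as a SQL query against the source table in place. This is a conceptual illustration only: it is not PuppyGraph's API, schema format, or execution engine, and the table, column, and function names are hypothetical.

```python
import sqlite3

# Hypothetical "graph view": which table holds edges and which columns
# act as source and target vertex ids. Identifiers come from this trusted
# mapping, never from user input.
GRAPH_VIEW = {"edge_table": "transfers", "src": "from_account", "dst": "to_account"}


def demo_connection():
    """Create an in-memory relational table standing in for existing data."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE transfers (from_account TEXT, to_account TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO transfers VALUES (?, ?, ?)",
        [("a", "b", 10.0), ("b", "c", 25.0), ("c", "d", 5.0), ("a", "e", 7.5)],
    )
    return conn


def neighbors_within(conn, view, start, hops):
    """Return vertices reachable from `start` in at most `hops` hops."""
    visited = {start}
    frontier = {start}
    for _ in range(hops):
        if not frontier:
            break
        placeholders = ",".join("?" * len(frontier))
        sql = (
            f"SELECT DISTINCT {view['dst']} FROM {view['edge_table']} "
            f"WHERE {view['src']} IN ({placeholders})"
        )
        rows = conn.execute(sql, sorted(frontier)).fetchall()
        # Only vertices not seen before form the next frontier.
        frontier = {row[0] for row in rows} - visited
        visited |= frontier
    return visited - {start}


conn = demo_connection()
print(sorted(neighbors_within(conn, GRAPH_VIEW, "a", hops=2)))  # ['b', 'c', 'e']
```

Because the graph is only a view over the table, changing the mapping yields a different graph over the same rows, with no pipeline to rebuild and no second copy of the data to keep in sync.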

PuppyGraph is the first and only real-time, zero-ETL graph query engine on the market. It lets data teams query existing relational data stores as a unified graph model, can be deployed in under 10 minutes, and bypasses the cost, latency, and maintenance hurdles of traditional graph databases.

It seamlessly integrates with data lakes like Apache Iceberg, Apache Hudi, and Delta Lake, as well as databases including MySQL, PostgreSQL, and DuckDB, so you can query across multiple sources simultaneously.

Key PuppyGraph capabilities include:

- Zero ETL: PuppyGraph runs as a query engine on your existing relational databases and lakes. Skip pipeline builds, reduce fragility, and start querying as a graph in minutes.

- No Data Duplication: Query your data in place, eliminating the need to copy large datasets into a separate graph database. This ensures data consistency and leverages existing data access controls.

- Real-Time Analysis: By querying live source data, analyses reflect the current state of the environment rather than static, potentially outdated graph snapshots. PuppyGraph users report 6-hop queries across billions of edges in less than 3 seconds.

- Scalable Performance: PuppyGraph’s distributed compute engine scales with your cluster size. Run petabyte-scale workloads and deep traversals like 10-hop neighbors, and get answers back in seconds. This exceptional query performance is achieved through the use of parallel processing and vectorized evaluation technology.

- Best of SQL and Graph: Because PuppyGraph queries your data in place, teams can use their existing SQL engines for tabular workloads and PuppyGraph for relationship-heavy analysis, all on the same source tables. No need to force every use case through a graph database or retrain teams on a new query language.

- Lower Total Cost of Ownership: Graph databases make you pay twice, once for pipelines, duplicated storage, and parallel governance, and again for the high-memory hardware needed to make them fast. PuppyGraph removes both costs by querying your lake directly with zero ETL and no second system to maintain. No massive RAM bills, no duplicated ACLs, and no extra infrastructure to secure.

- Flexible and Iterative Modeling: Metadata-driven schemas make it possible to create multiple graph views from the same underlying data. Models can be iterated on quickly without rebuilding data pipelines, supporting agile analysis workflows.

- Standard Querying and Visualization: Support for standard graph query languages (openCypher, Gremlin) and integrated visualization tools helps analysts explore relationships intuitively and effectively.

- Proven at Enterprise Scale: PuppyGraph is already used by half of the top 20 cybersecurity companies, as well as engineering-driven enterprises like AMD and Coinbase. Whether it’s multi-hop security reasoning, asset intelligence, or deep relationship queries across massive datasets, these teams trust PuppyGraph to replace slow ETL pipelines and complex graph stacks with a simpler, faster architecture.

As data grows more complex, the most valuable insights often lie in how entities relate. PuppyGraph brings those insights to the surface, whether you’re modeling organizational networks, social introductions, fraud and cybersecurity graphs, or GraphRAG pipelines that trace knowledge provenance.

Deployment is simple: download the free Docker image, connect PuppyGraph to your existing data stores, define graph schemas, and start querying. PuppyGraph can be deployed via Docker, AWS AMI, GCP Marketplace, or within a VPC or data center for full data control.

Conclusion

In this article, we have compared TigerGraph and JanusGraph across architecture, query languages, performance characteristics, scaling models, and ideal use cases. TigerGraph and JanusGraph embody two distinct approaches: TigerGraph delivers a native, high-performance graph engine optimized for deep multi-hop traversals and parallel analytical workloads, while JanusGraph offers an open-source, pluggable graph stack that emphasizes backend flexibility, Gremlin interoperability, and integration with existing NoSQL and big-data ecosystems.

Both solutions, however, require provisioning and maintaining a separate graph database, building and operating ETL pipelines, and managing additional storage and governance layers, which can introduce complexity, cost, and operational overhead as datasets grow and use cases diversify. PuppyGraph presents a modern alternative by enabling real-time, zero-ETL graph analytics directly on existing relational and lakehouse data stores. It combines scalable multi-hop traversal performance, distributed compute, flexible metadata-driven schema modeling, and support for standard graph query languages, all without duplicating data or adding a separate graph storage tier.

For teams seeking high-performance graph analytics on current data sources, PuppyGraph offers a simpler, faster, and cost-effective solution. Explore the forever-free PuppyGraph Developer Edition, or book a demo with our team to see it in action.

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single node

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in Developer Edition, plus enterprise features

- Designed for production

- Available via AWS AMI & Docker install