MongoDB vs Neo4j: Key Differences

MongoDB and Neo4j are very different types of databases, and choosing between them means understanding two fundamentally different approaches to data storage and querying. MongoDB is a document database designed for flexible schema management and horizontal scalability across general-purpose workloads. Neo4j is a native graph database built for fast traversal of highly connected data through index-free adjacency.

Performance on each platform depends heavily on access patterns and data structure. MongoDB handles document lookups, aggregations, and hierarchical queries efficiently through indexes and its aggregation framework. Neo4j's index-free adjacency keeps traversal cost proportional to the explored subgraph rather than total graph size, making it effective for deep relationship queries like fraud detection, social network analysis, and recommendation engines.

This comparison examines the core technical differences, performance characteristics, and ideal use cases for both MongoDB and Neo4j, along with how zero-ETL graph query engines like PuppyGraph can extend MongoDB's capabilities for relationship-heavy workloads without data duplication.

What is MongoDB?

MongoDB is a document-oriented NoSQL database that stores data in flexible, JSON-like BSON (Binary JSON) documents. Since its release in 2009, MongoDB has become one of the most widely adopted databases for modern applications requiring schema flexibility and horizontal scalability.

Document-oriented data model

Unlike traditional relational databases, MongoDB organizes data into collections of documents rather than tables of rows. Each document is a BSON object that can contain nested structures, arrays, and varying fields without requiring a predefined schema. You can model one-to-many relationships through embedding (nesting related data) or referencing (storing IDs that point to other documents). Embedding provides better read performance and atomic updates, while referencing reduces duplication.
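As a minimal sketch of the two modeling options, the plain Python dictionaries below stand in for BSON documents. The collection and field names (`authors`, `posts`, `author_id`) are hypothetical, and the lookup helper is an in-memory stand-in rather than a MongoDB API.

```python
# Embedding: the author's posts live inside the author document,
# so a single read returns everything in one piece.
author_embedded = {
    "_id": 1,
    "name": "Ada",
    "posts": [
        {"title": "Graphs 101", "tags": ["graph"]},
        {"title": "Documents 101", "tags": ["document"]},
    ],
}

# Referencing: posts are separate documents that store the author's _id.
authors = [{"_id": 1, "name": "Ada"}]
posts = [
    {"_id": 10, "author_id": 1, "title": "Graphs 101"},
    {"_id": 11, "author_id": 1, "title": "Documents 101"},
]


def posts_for_author(author_id, post_docs):
    """Resolve the reference with a second lookup (done in memory here)."""
    return [p["title"] for p in post_docs if p["author_id"] == author_id]


embedded_titles = [p["title"] for p in author_embedded["posts"]]
referenced_titles = posts_for_author(1, posts)
```

Both shapes yield the same titles. The difference is that the embedded form needs one read, while the referenced form needs a second lookup but stores each post only once.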

The flexible schema allows applications to evolve without database migrations. You can add fields, change types, or restructure objects without downtime. MongoDB provides optional schema validation when you need to enforce structure.
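For illustration, optional validation is usually expressed as a `$jsonSchema` document. The sketch below writes one as a Python dictionary for a hypothetical `users` collection; the field names and rules are assumptions chosen for the example.

```python
# Hypothetical validator for a "users" collection. With a driver such as
# PyMongo, a dictionary like this is typically passed as the `validator`
# option when the collection is created.
user_validator = {
    "$jsonSchema": {
        "bsonType": "object",
        # Only "email" is mandatory; documents may still carry other fields.
        "required": ["email"],
        "properties": {
            "email": {"bsonType": "string"},
            "age": {"bsonType": "int", "minimum": 0},
        },
    }
}
```

Because only `email` is required and no other fields are forbidden, documents can keep varying in shape while the one rule that matters is enforced.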

Query capabilities and aggregation framework

MongoDB's query language supports filtering, sorting, projection, and complex predicates across nested fields. The query optimizer uses indexes, including compound indexes, multikey indexes on arrays, text search, and geospatial indexes.

The aggregation framework provides pipeline-based data processing with stages for filtering, grouping, sorting, joining collections, and computing values. The $graphLookup stage enables recursive queries for hierarchical relationships like organizational charts or category trees. It supports depth limits, filtering at each hop, and cycle detection, though it's most effective for tree-like relationships rather than arbitrary graph patterns.
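A rough sketch of an organizational-chart query is shown below as a Python pipeline definition. The `employees` collection and the `name`, `reports_to`, and `active` fields are hypothetical; only the `$graphLookup` option names come from MongoDB itself.

```python
# Walk the management chain upward from one employee.
org_chart_pipeline = [
    # Start from a single employee document.
    {"$match": {"name": "Dana"}},
    {
        "$graphLookup": {
            "from": "employees",               # collection to search recursively
            "startWith": "$reports_to",        # first value to look up
            "connectFromField": "reports_to",  # field followed at each hop
            "connectToField": "name",          # field it is matched against
            "as": "management_chain",          # output array field
            "maxDepth": 5,                     # cap on recursion depth
            "depthField": "level",             # records the hop count per result
            "restrictSearchWithMatch": {"active": True},  # filter applied at every hop
        }
    },
]
```

A list like this would be passed to the driver's aggregate call on the `employees` collection. Each matched employee comes back with a `management_chain` array of the managers above them.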

Horizontal scalability through sharding

MongoDB scales horizontally through sharding, distributing data across servers based on a shard key. The mongos routing process directs queries to appropriate shards, and config servers maintain metadata. MongoDB automatically balances data across shards as clusters grow.

Choosing an effective shard key is critical: a good key distributes writes evenly and supports common query patterns. Replica sets provide high availability within each shard, with automatic failover if the primary fails.
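To make the effect of shard key choice concrete, the sketch below simulates hash-based placement in plain Python. It is an illustration only: the hash function, shard count, and `orders` data are assumptions, and MongoDB's actual chunk placement and balancing work differently.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 4


def shard_for(key_value, num_shards=NUM_SHARDS):
    """Map a shard-key value to a shard with a stable hash (illustrative only)."""
    digest = hashlib.md5(str(key_value).encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards


def distribution(docs, key):
    """Count how many documents land on each shard for a given key field."""
    return Counter(shard_for(doc[key]) for doc in docs)


# Hypothetical orders: 10,000 distinct user_ids, but only two countries.
orders = [
    {"user_id": i, "country": "US" if i % 10 else "CA"}
    for i in range(10_000)
]

by_user = distribution(orders, "user_id")     # high-cardinality key
by_country = distribution(orders, "country")  # low-cardinality key
```

The high-cardinality key spreads documents over every shard, while the two-valued key can never use more than two shards and concentrates most writes on one of them.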

Indexing and ACID transactions

MongoDB relies on indexes for query performance. Without appropriate indexes, queries scan entire collections. The query planner evaluates available indexes and chooses execution strategies based on estimated cost. The WiredTiger storage engine provides document-level concurrency control, compression, and its own in-memory cache.

MongoDB has supported ACID transactions across multiple documents and collections since version 4.0 on replica sets, and since version 4.2 on sharded clusters. Transactions use snapshot isolation and can span multiple operations. Single-document operations are always atomic without requiring explicit transactions. Multi-document transactions add coordination overhead and are more expensive when spanning shards.

What is Neo4j?

Neo4j is a graph database that stores relationships as physical pointers between nodes. Each relationship record contains direct references to its source node, target node, and neighboring relationships in a linked list structure. This means traversal cost scales with explored relationships, not total graph size.

In Neo4j's data model, nodes represent entities and can carry multiple labels indicating their type. Relationships are directed edges with a single type that connect two nodes. Both can have properties as key-value pairs. Neo4j stores this data in specialized files, with separate files for nodes, relationships, properties, and labels. Each record type has a fixed size, so Neo4j calculates disk locations directly from record IDs.
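The fixed-size record idea can be sketched in a few lines: with a constant record size, a record's byte offset is simply its ID multiplied by that size. The sizes below are illustrative assumptions, since the real values depend on the Neo4j version and store format.

```python
# Illustrative record sizes in bytes (assumed, not authoritative).
NODE_RECORD_BYTES = 15
RELATIONSHIP_RECORD_BYTES = 34


def record_offset(record_id: int, record_size: int) -> int:
    """Byte offset of a record in a store file of fixed-size records."""
    return record_id * record_size


node_offset = record_offset(1000, NODE_RECORD_BYTES)
rel_offset = record_offset(1000, RELATIONSHIP_RECORD_BYTES)
```

Because the offset is pure arithmetic, locating a record takes constant time and needs no index lookup.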

Cypher query language and index-free adjacency

Cypher uses ASCII art pattern syntax where (a)-[:KNOWS]->(b) describes nodes connected by a relationship. Queries are declarative: you describe what you want, and the query planner decides execution strategy. Cypher supports aggregations, path finding, and subqueries.

Neo4j's traversal performance comes from direct pointer chasing rather than index lookups. When traversing from node A to node B, Neo4j reads A's relationship pointer, follows it to the relationship record, and jumps to B. Time to traverse N relationships is O(N), regardless of total graph size. This works well for queries exploring local neighborhoods (1-5 hops), but can get expensive for deep traversals touching millions of relationships.
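The following in-memory sketch mimics pointer chasing with plain Python object references. It is a simplified model, not Neo4j's storage engine: the `Node` class and `traverse` helper are hypothetical and exist only to show that work scales with the relationships actually followed.

```python
from collections import deque


class Node:
    def __init__(self, name):
        self.name = name
        self.neighbors = []  # direct object references, no index lookup


def connect(a, b):
    """Create an undirected link by storing a reference on both nodes."""
    a.neighbors.append(b)
    b.neighbors.append(a)


def traverse(start, max_hops):
    """Breadth-first walk; returns (names reached, relationships followed)."""
    seen = {start.name}
    followed = 0
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in node.neighbors:
            followed += 1
            if neighbor.name not in seen:
                seen.add(neighbor.name)
                queue.append((neighbor, depth + 1))
    return seen, followed


# A small neighborhood (a - b - c) next to a large, unrelated chain.
a, b, c = Node("a"), Node("b"), Node("c")
connect(a, b)
connect(b, c)
others = [Node(f"x{i}") for i in range(10_000)]
for left, right in zip(others, others[1:]):
    connect(left, right)

reached, followed = traverse(a, max_hops=2)
```

The walk from `a` follows only three relationship references even though the graph holds more than ten thousand nodes, because the unrelated chain is never touched.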

ACID transactions and clustering

Neo4j provides ACID transactions with read-committed isolation by default, backed by locking. When you modify a node or relationship, Neo4j locks it until commit. If two transactions deadlock, one is aborted with a deadlock error. This works well for low-contention workloads but can bottleneck under high write concurrency.

Neo4j Enterprise Edition uses a Core + Read Replica architecture (recent versions call these roles primaries and secondaries). The Core cluster runs Raft consensus. One Core is the leader for a given database and handles all of its writes. Read Replicas asynchronously poll Core servers for updates and serve read-only queries. Writes don't scale horizontally: all writes to a database funnel through its single leader. The Community Edition runs on a single instance without clustering.

Ecosystem and schema flexibility

Neo4j has been around since 2007. The ecosystem includes official drivers for major languages (Python, Java, JavaScript, Go, .NET), visualization tools (Neo4j Bloom, Browser), and a desktop IDE. Community tools include APOC (a plugin library), a graph data science library, and connectors for Spark and Kafka.

Neo4j doesn't require schema definitions upfront. You can create nodes with any labels and properties without declaring them first. Add indexes and constraints for query performance or validation. Nodes with the same label can have different property sets.

Core differences between MongoDB and Neo4j

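Placed side by side, the two databases differ along a few key dimensions. MongoDB stores BSON documents in collections, queries them through its query language and aggregation framework, and scales writes horizontally through sharding. Neo4j stores nodes and relationships as linked records, queries them with Cypher, and routes all writes through a single leader. MongoDB models relationships through embedding, referencing, or $graphLookup, while Neo4j traverses them directly through index-free adjacency.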

When to use MongoDB

Based on these differences, when is MongoDB the better choice? Here are a few key areas where MongoDB comes out ahead:

Flexible, self-contained documents

Choose MongoDB when your data naturally fits into documents with clear boundaries. Content management systems, product catalogs, user profiles, and configuration stores work well because queries retrieve entire documents or field subsets. The document model matches how developers think about objects in code, reducing impedance mismatch.

MongoDB handles schema evolution gracefully. Add fields, change types, or restructure nested objects without migrations, accelerating development when requirements change frequently or when document types vary within collections.

High-volume operational workloads

MongoDB performs well for operational systems with high transaction rates. It supports thousands of writes per second across sharded clusters and provides low-latency reads through indexes. Use cases include e-commerce platforms, mobile backends, real-time analytics, and IoT data collection.

The aggregation framework handles complex analytical queries without moving data to separate systems. Filter, group, join, and compute aggregates in the database, eliminating external ETL processes or data warehouses for many workloads.
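As a hedged example of in-database analytics, the pipeline below computes revenue per category. The `orders` collection and its `status`, `category`, and `amount` fields are hypothetical; the stage operators themselves are standard aggregation stages.

```python
# Top five categories by revenue from completed orders.
revenue_by_category = [
    # Keep only completed orders (can use an index on "status").
    {"$match": {"status": "completed"}},
    # Sum the amount and count the orders per category.
    {
        "$group": {
            "_id": "$category",
            "revenue": {"$sum": "$amount"},
            "orders": {"$sum": 1},
        }
    },
    # Highest revenue first, then keep the top five.
    {"$sort": {"revenue": -1}},
    {"$limit": 5},
]
```

Placing `$match` first narrows the input before grouping, which is generally the cheaper ordering.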

Horizontal scalability and hierarchical relationships

When data volume exceeds single-server capacity, MongoDB's sharding distributes data across servers. Each shard operates independently, so adding capacity means adding servers. This works well for time-series data, multi-tenant applications, and continuously growing datasets.

MongoDB handles parent-child and tree-like relationships effectively through embedding or $graphLookup. Organizational charts, category hierarchies, file systems, and comment threads fit naturally. For hierarchies with known depth, embedding provides the best performance. For deeper hierarchies, $graphLookup supports traversing references with depth limits.

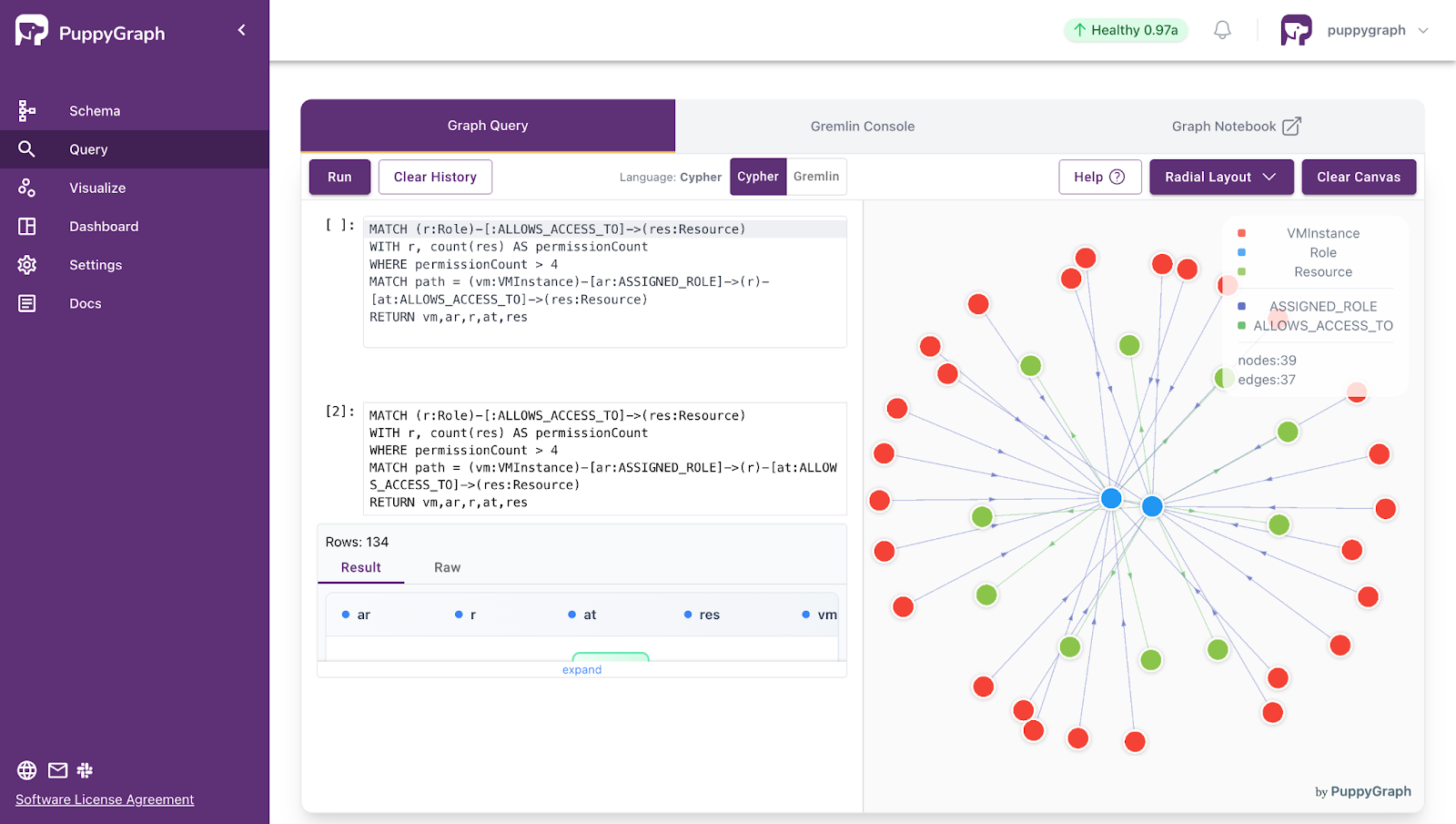

Extending MongoDB with graph capabilities

When relationship-heavy queries become common, PuppyGraph can extend MongoDB with graph capabilities. PuppyGraph connects to MongoDB and creates a virtual graph layer on existing collections, allowing Cypher or Gremlin queries for multi-hop traversals and pattern matching while keeping MongoDB as the primary data store.

This works well when you need both document operations and graph analytics on the same data. MongoDB handles transactional workloads while PuppyGraph enables relationship exploration and graph algorithms without data duplication or ETL pipelines.

When to use Neo4j

On the other hand, the following factors point to Neo4j as the better fit, mainly because it is a native graph database:

Highly connected, relationship-centric data

Choose Neo4j when relationships between entities are as important as the entities themselves. Social networks, fraud detection rings, knowledge graphs, and identity resolution depend on exploring connections rather than retrieving isolated records. Neo4j's index-free adjacency makes traversing connections fast and predictable.

The graph data model naturally represents domains where connections define meaning. Questions like "Who are my second-degree connections?" or "Which users share suspicious transaction patterns?" map directly to graph queries without complex joins.
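To illustrate the second-degree question, the sketch below pairs a Cypher query (held as a Python string) with an in-memory equivalent over a small adjacency map. The `Person` label, `KNOWS` relationship type, and sample names are assumptions made for the example.

```python
# Hypothetical schema: (:Person {name}) nodes joined by KNOWS relationships.
SECOND_DEGREE_QUERY = """
MATCH (me:Person {name: $name})-[:KNOWS]-(friend)-[:KNOWS]-(fof:Person)
WHERE fof <> me AND NOT (me)-[:KNOWS]-(fof)
RETURN DISTINCT fof.name AS name
"""


def second_degree(graph, person):
    """Friends of friends, excluding the person and their direct friends."""
    direct = graph[person]
    reachable = set()
    for friend in direct:
        reachable |= graph[friend]
    return reachable - direct - {person}


# Undirected KNOWS relationships as an adjacency map.
knows = {
    "ann": {"bob", "cat"},
    "bob": {"ann", "dan"},
    "cat": {"ann", "dan", "eve"},
    "dan": {"bob", "cat"},
    "eve": {"cat"},
}

fof_of_ann = second_degree(knows, "ann")
```

The pattern in the query reads almost like the question itself: two `KNOWS` hops out from the starting person, minus anyone already directly connected.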

Deep multi-hop traversals and pattern detection

Neo4j excels at queries exploring 3-10+ hops between nodes. Fraud detection examines transaction chains across accounts. Recommendation engines find paths through user-product-user networks. Network analysis explores connectivity patterns across organizations.

Neo4j's Cypher language supports pattern matching that describes subgraph structures. You can search for triangles, cycles, relationship sequences, or complex motifs. The Graph Data Science library provides algorithms for community detection, centrality analysis, pathfinding, and similarity scoring. These answer questions about influence, importance, and connectivity that are difficult to express in document models.
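As a small illustration of motif search, the sketch below finds triangles in an undirected graph held in memory. It shows what such a pattern query computes, not how Neo4j executes it, and the account names are made up.

```python
from itertools import combinations


def find_triangles(graph):
    """Return each triangle once, as a sorted tuple of node names."""
    triangles = set()
    for node, neighbors in graph.items():
        # Any two neighbors that are also linked to each other close a triangle.
        for first, second in combinations(sorted(neighbors), 2):
            if second in graph.get(first, set()):
                triangles.add(tuple(sorted((node, first, second))))
    return sorted(triangles)


# Hypothetical accounts linked when they share a device.
shared_devices = {
    "acct1": {"acct2", "acct3"},
    "acct2": {"acct1", "acct3"},
    "acct3": {"acct1", "acct2", "acct4"},
    "acct4": {"acct3"},
}

triangles = find_triangles(shared_devices)
```

Here the three mutually linked accounts form the only triangle, the kind of tightly connected cluster a fraud analyst might want to inspect.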

Real-time operational graphs

Neo4j works best when the graph sits on the critical path of application requests. Millisecond-level response times support real-time fraud detection, personalized recommendations, access control evaluation, and dynamic routing.

The database handles concurrent reads and writes with ACID guarantees, suitable for transactional workloads where data changes frequently. Use cases include session management, real-time eligibility checks, and operational dashboards displaying live network status.

Which is best: MongoDB or Neo4j?

As shown above, the choice depends on your data structure, query patterns, and operational priorities. Neither is universally better, but each excels at different workloads. In short, here is where each one makes sense:

Choose MongoDB when

Select MongoDB when data naturally organizes into self-contained documents:

- Document-centric applications: Content management, product catalogs, and user profiles, where operations retrieve or update entire documents

- Flexible schema requirements: Rapid development cycles, evolving data models, varying document structures

- High write throughput: Distributed writes across sharded clusters for continuous data ingestion

- Hierarchical relationships: Organizational charts, category trees, or nested structures fitting documents or $graphLookup queries

- Operational scale: Systems requiring horizontal scalability, geographic distribution, or multi-tenant isolation

Choose Neo4j when

Select Neo4j when relationships drive core application functionality:

- Relationship-centric queries: Social networks, fraud detection, recommendation engines, where connections matter more than individual records

- Deep traversals: Questions exploring 3-10+ hops through complex data relationships: finding influence paths, detecting circular references, tracing supply chains

- Pattern matching: Detecting relationship structures like fraud rings, attack patterns, and collaboration networks

- Graph algorithms: Computing centrality, community detection, shortest paths, or similarity scores

- Real-time graph operations: Applications requiring millisecond-level response times for multi-hop queries

Why consider PuppyGraph as an alternative

Running MongoDB and Neo4j side by side means maintaining separate infrastructure and synchronizing data between them. MongoDB handles documents well but struggles with deep relationship queries. Neo4j excels at graph workloads but requires extracting data from other systems.

PuppyGraph is the first and only real-time, zero-ETL graph query engine on the market. It lets data teams query existing relational data stores as a unified graph model, can be deployed in under 10 minutes, and avoids the cost, latency, and maintenance hurdles of traditional graph databases.

It seamlessly integrates with data lakes like Apache Iceberg, Apache Hudi, and Delta Lake, as well as databases including MySQL, PostgreSQL, and DuckDB, so you can query across multiple sources simultaneously.

Key PuppyGraph capabilities include:

- Zero ETL: PuppyGraph runs as a query engine on your existing relational databases and lakes. Skip pipeline builds, reduce fragility, and start querying as a graph in minutes.

- No Data Duplication: Query your data in place, eliminating the need to copy large datasets into a separate graph database. This ensures data consistency and leverages existing data access controls.

- Real-Time Analysis: By querying live source data, analyses reflect the current state of the environment, mitigating the problem of relying on static, potentially outdated graph snapshots. PuppyGraph users report 6-hop queries across billions of edges in less than 3 seconds.

- Scalable Performance: PuppyGraph’s distributed compute engine scales with your cluster size. Run petabyte-scale workloads and deep traversals like 10-hop neighbors, and get answers back in seconds. This exceptional query performance is achieved through the use of parallel processing and vectorized evaluation technology.

- Best of SQL and Graph: Because PuppyGraph queries your data in place, teams can use their existing SQL engines for tabular workloads and PuppyGraph for relationship-heavy analysis, all on the same source tables. No need to force every use case through a graph database or retrain teams on a new query language.

- Lower Total Cost of Ownership: Graph databases make you pay twice: once for pipelines, duplicated storage, and parallel governance, and again for the high-memory hardware needed to make them fast. PuppyGraph removes both costs by querying your lake directly with zero ETL and no second system to maintain. No massive RAM bills, no duplicated ACLs, and no extra infrastructure to secure.

- Flexible and Iterative Modeling: Using metadata-driven schemas allows creating multiple graph views from the same underlying data. Models can be iterated upon quickly without rebuilding data pipelines, supporting agile analysis workflows.

- Standard Querying and Visualization: Support for standard graph query languages (openCypher, Gremlin) and integrated visualization tools helps analysts explore relationships intuitively and effectively.

- Proven at Enterprise Scale: PuppyGraph is already used by half of the top 20 cybersecurity companies, as well as engineering-driven enterprises like AMD and Coinbase. Whether it’s multi-hop security reasoning, asset intelligence, or deep relationship queries across massive datasets, these teams trust PuppyGraph to replace slow ETL pipelines and complex graph stacks with a simpler, faster architecture.

As data grows more complex, the most valuable insights often lie in how entities relate. PuppyGraph brings those insights to the surface, whether you’re modeling organizational networks, social introductions, fraud and cybersecurity graphs, or GraphRAG pipelines that trace knowledge provenance.

Deployment is simple: download the free Docker image, connect PuppyGraph to your existing data stores, define graph schemas, and start querying. PuppyGraph can be deployed via Docker, AWS AMI, GCP Marketplace, or within a VPC or data center for full data control.

Conclusion

These two popular databases represent very different approaches to data management. MongoDB's document model provides flexibility, horizontal scalability, and developer productivity for general-purpose applications. Neo4j's graph architecture delivers fast, predictable traversal performance for relationship-centric workloads.

The right choice depends on whether queries primarily retrieve documents or explore connections. MongoDB fits systems organized around discrete records. Neo4j fits systems where relationships define meaning and multi-hop traversals drive application logic.

For teams already using MongoDB who need graph capabilities, PuppyGraph provides a simpler path forward. Rather than maintaining separate systems and ETL pipelines, PuppyGraph adds graph querying directly to existing MongoDB deployments without data duplication.

To explore how PuppyGraph can extend your MongoDB deployment with graph capabilities, download PuppyGraph's forever-free Developer edition or book a demo to see it in action.

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single node

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in Developer Edition, plus enterprise features

- Designed for production

- Available via AWS AMI & Docker install