What is SQL Graph? How Does It Work?

Enterprises increasingly face data problems defined by relationships, including fraud networks, supply chains, and customer influence. Yet, most analytics continue to run on traditional relational databases. Many argue that unlocking these patterns requires native graph databases with specialized engines and query languages. In reality, relational databases have evolved to model and query graph structures, allowing organizations to run meaningful graph analytics without retiring their existing infrastructure. By combining well-known relational techniques with modern SQL features, enterprises can uncover multi-hop connections, perform relationship analysis, and answer graph-centric questions. All of this is possible while preserving governance, compliance, and operational continuity.

Can SQL Databases Do Graphs?

The answer is yes. SQL databases can represent and analyze graph structures, despite them being originally designed for tabular, relational data.

Graphs are just collections of entities (nodes) and relationships (edges). In relational terms, these map naturally to tables: one table for entities, one for relationships, and possibly additional tables for attributes. This means you can model graph data directly in any relational database without changing the underlying engine.

What enables graph workloads in SQL is not a separate “graph mode,” but rather the ability to store relationships explicitly and query them flexibly. For decades, developers have implemented graph-like queries in SQL using joins, linking a table of relationships back to itself to traverse multiple hops. Over time, language features such as recursive common table expressions (CTEs) have made these traversals more efficient and easier to write, making possible multi-level pathfinding, hierarchy exploration, and connection analysis directly in SQL.

However, this capability comes with caveats. SQL's relational model and declarative language were not designed for the inherent complexity of graph patterns. Expressing even moderately complex graph traversals, such as finding the shortest path through a dense network, identifying communities, or calculating centrality measures……results in extremely complicated, nested, and often obscure SQL queries.

Furthermore, SQL engines lack the native storage optimizations (like index-free adjacency) and execution engines tailored for high-performance graph pattern matching found in dedicated graph databases (Neo4j, Amazon Neptune, etc.). Performance for deep or wide traversals often degrades significantly compared to purpose-built systems.

That said, SQL databases have limitations for advanced graph workloads. But for many enterprise workloads, graphs constitute a subset of a broader relational model, where SQL offers more than enough capability. You can design your schema so relationships have explicit and indexed definitions, then use SQL’s set-based operations to navigate them.

So, SQL databases can handle graph workloads when the goal is to express relationships, run multi-hop queries, and integrate results into existing BI or transactional systems, without the operational overhead of introducing a separate graph database.

Architectural Patterns for Graphs in SQL

Relational databases inherently store graph structures through their basic design, without special extensions or syntax. Every table row represents potential nodes, while relationships between tables form implicit edges.

Suppose we have two tables in a database, one for customers and the other for orders. Orders table might contain information like price, amount, who made this order. If the orders table looks like order(id, price, amount, customer_id), then the customer_id column in the orders table can be interpreted as a directed edge in graph terms.

One interpretation is that, every foreign key is an implicit edge, the graph structure is already equipped in the relational database. Theoretically, we can query the hidden graph using nested JOINs. Anyway, it’s more convenient to define explicit edge tables, making edges first-class entities. And to accelerate multi-hop analysis, you can even store precomputed paths as tables.

The fundamental truth is, all relational data contains latent graph structures. Foreign keys define implicit edges, junction tables materialize many-to-many relationships, and hierarchical data (like categories) exists as parent-child links. In principle, these can be queried as graphs using standard SQL joins.

Modeling Graphs in Relational Databases

As mentioned above, SQL tables can effectively represent nodes, edges, and relationships. To illustrate, consider a simple social video network. Here, users (nodes) interact with videos (nodes) through relationships such as uploading, liking, or following other users. This can be systematically modeled by creating discrete tables for core entities, and dedicated junction tables to capture relationships.

Defining Node Tables

In graph analytics, nodes represent entities—the "things" in your system. When modeling graphs in relational databases, these nodes become standard SQL tables. For our social video network example, we have two core entity types that will serve as graph nodes.

- Users

CREATE TABLE users (

id INT PRIMARY KEY,

name VARCHAR(50) NOT NULL,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

- Videos

CREATE TABLE videos (

id INT PRIMARY KEY,

title VARCHAR(100) NOT NULL,

duration_seconds INT NOT NULL,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

);

Defining Edge Tables

An edge table models the relationship between two nodes. Unlike node tables (which store entity attributes), edge tables exclusively capture connections. We ensure graph integrity through foreign key constraints.

For our example, users can follow other users, comment on or like videos. We only exhibit two relations here:

- Follows (user-to-user) :

CREATE TABLE follows (

follower_id INT NOT NULL,

followee_id INT NOT NULL,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (follower_id, followee_id),

FOREIGN KEY (follower_id) REFERENCES users(id) ON DELETE CASCADE,

FOREIGN KEY (followee_id) REFERENCES users(id) ON DELETE CASCADE

);

- Likes (user-to-video)

CREATE TABLE likes (

user_id INT NOT NULL,

video_id INT NOT NULL,

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (user_id, video_id),

FOREIGN KEY (user_id) REFERENCES users(id) ON DELETE CASCADE,

FOREIGN KEY (video_id) REFERENCES videos(id) ON DELETE CASCADE

);

Populate with Sample Data

Having defined our node and edge tables, we now validate the schema by populating it with realistic sample data.

- Insert Node Entities (Users and Videos)

INSERT INTO users (id, name) VALUES

(1, 'Alice'),

(2, 'Bob'),

(3, 'Charlie');

INSERT INTO videos (id, title, duration_seconds) VALUES

(101, 'SQL for Graph', 680),

(102, 'Weekend Hiking', 1245);

- Insert Edge Relationships (Follows and Likes)

INSERT INTO follows (follower_id, followee_id) VALUES (1, 2);

INSERT INTO likes (user_id, video_id) VALUES (3, 101);

Thus we put the whole graph into SQL resources.

Querying Graph Structures with SQL

Though limited, native SQL has graph abilities.

Single-hop Queries with Joins

For one-hop relationships, a simple join between the edge table and the node table suffices. In our example, finding who follows Bob (suppose Bob’s id is 2) is as follows:

SELECT u.name

FROM follows f

JOIN users u ON f.follower_id = u.id

WHERE f.followee_id = 2;

Multi-hop Traversals in native SQL

Two-hop relationships are also common in graph analytics, and we might be interested in “which videos do Bob’s followers like”. Following is the SQL query (suppose Bob’s id is 2):

SELECT DISTINCT v.title AS video_title

FROM follows f

JOIN likes l ON f.follower_id = l.user_id

JOIN videos v ON l.video_id = v.id

WHERE f.followee_id = 2;

Analysis on two-hop or even more number of hops can be fundamental to recommendation algorithms, which video platforms heavily rely on to surface relevant content.

Multi-hop Traversals with CTEs

At first glance, native SQL is well-suited for straightforward two-hop queries, like the initial example retrieving videos liked by users who follow a specific account. Common Table Expressions (CTEs) deliver decisive advantages in complex scenarios.

CTEs transform intricate logic into modular, self-documenting steps (e.g., isolating user preferences, identifying overlapping likers, and filtering novel recommendations), dramatically improving readability and maintainability.

Suppose we want to recommend videos to Alice. A possible strategy is, identifying the videos she has liked, and then analyzing the viewing preferences of users who share those interests to uncover additional videos they also enjoy.

Let’s see how to write such query with CTEs:

- Find out the videos Alice (suppose Alice’s id is 1) has liked

WITH user1_likes AS (

SELECT video_id

FROM likes

WHERE user_id = 1

),

- Find out users with similar interests:

other_users AS (

SELECT DISTINCT l.user_id

FROM likes l

JOIN user1_likes u1l ON l.video_id = u1l.video_id

WHERE l.user_id <> 1

),

- List videos those users like and recommend them to Alice:

other_users_likes AS (

SELECT DISTINCT l.video_id

FROM likes l

JOIN other_users ou ON l.user_id = ou.user_id

WHERE l.video_id NOT IN (SELECT video_id FROM user1_likes)

)

SELECT DISTINCT v.title AS video_title

FROM other_users_likes oul

JOIN videos v ON oul.video_id = v.id;In this example, native SQL can become error-prone and opaque. While CTEs enable single-point modifications, eliminate redundant subqueries, and support seamless extensions—turning brittle scripts into scalable, engineer-friendly data pipelines.

Growing Graph Capabilities in SQL

While SQL has long dominated relational data management, its native limitations in handling graph-like relationships, such as multi-hop traversals for recommendations or fraud detection, are increasingly apparent.

Traditional approaches using nested JOINs quickly become unwieldy for complex patterns, as seen in basic two-hop queries (e.g., find videos liked by users who follow Bob). Here, Common Table Expressions (CTEs) emerge as a pivotal evolution, transforming opaque nested logic into modular, readable workflows.

By decomposing problems into named steps like isolating a user’s preferences (user1_likes), identifying overlapping likers (other_users), and filtering novel recommendations (other_users_likes)—CTEs turn brittle scripts into maintainable data pipelines.

CTEs and recursive CTEs were first formally introduced in the SQL:1999 standard, marking a significant leap in SQL’s ability to handle hierarchical and graph-like data. Recursive CTEs unlock arbitrary-depth graph traversal, enable elegant solutions for problems like "find all users within three degrees of connection". Developers could express multi-hop queries in a single statement, yet this demands intricate boilerplate, limiting accessibility.

As demand for graph analytics grows significantly, native graph databases like Neo4j appear, and mainstream relational database systems are catching up by integrating built-in or extensible graph processing capabilities.

- PostgreSQL supports graph analytics through Apache AGE (Apache Graph Engine)

- SQL Server introduced native graph support starting with SQL Server 2017

- Oracle Database offers graph analytics through Oracle Graph, supporting PGQL (Property Graph Query Language)

How SQL systems have been evolving nowadays for graph analytics is less adding brand-new syntax and more about improving performance and usability. That means smarter optimizers, better indexing strategies, and tighter integration with analytics tools. Enterprises can now address many graph analysis needs directly within their relational systems, delaying or avoiding the need for specialized graph databases.

Graph Capabilities Across Popular SQL Databases

While SQL’s core language is standardized, each major relational database implements graph-related features differently. These differences can significantly impact how you design, query, and optimize graph workloads.

PostgreSQL

PostgreSQL enhances its analytical capabilities through Apache AGE (Apache Graph Engine), an open-source extension that integrates graph processing into the relational database. AGE enables native execution of Cypher-like queries (the declarative language popularized by Neo4j), allowing users to model complex relationships using standard SQL tables as nodes and edges. Without requiring data migration, it supports pattern matching, path traversal, and graph algorithms directly within PostgreSQL, leveraging its robust transactional foundation and ecosystem (e.g., JSONB, PostGIS).

This integration lets developers build interconnected data applications—like fraud detection or recommendation systems—using familiar SQL workflows while unlocking graph-specific power. AGE transforms PostgreSQL into a versatile multi-model database, bridging relational and graph paradigms efficiently and cost-effectively.

SQL Server

SQL Server delivers native graph database capabilities directly within its relational engine, introduced in SQL Server 2017. It extends T-SQL with specialized NODE and EDGE table types to model entities and relationships, enabling intuitive pattern-matching queries via the MATCH() clause. This allows seamless traversal of complex hierarchies, such as organizational charts or supply chains, using familiar SQL syntax without requiring external tools or data migration.

Integrated into the Microsoft data ecosystem, SQL Server’s graph features interoperate with Power BI for visualization and Azure Synapse for advanced analytics. While less expressive than dedicated graph query languages, its tight coupling with enterprise-grade transactional processing makes it capable for operational graph use cases within existing SQL Server deployments.

Oracle Database

Oracle Database provides robust native graph analytics through Oracle Graph, a high-performance engine integrated into the database since Oracle Database 18c. It supports property graphs and the Property Graph Query Language (PGQL), a SQL-inspired, Cypher-like syntax enabling intuitive pattern matching for complex relationship analysis. Users can execute basic graph queries directly within SQL, leveraging Oracle’s transactional integrity and scalability.

Beyond querying, Oracle Graph includes in-memory processing for advanced algorithms (PageRank, shortest path, community detection) and integrates with Python via the Graph Server. Ideal for enterprise applications, it transforms relational data into actionable graph insights without requiring separate infrastructure.

MySQL

MySQL offers limited graph capabilities primarily through recursive CTEs, introduced in MySQL 8.0. These allow hierarchical queries for tree-like structures, such as organizational charts or category taxonomies, using iterative self-joins to traverse parent-child relationships.

However, MySQL lacks native graph modeling (nodes/edges), pattern-matching syntax, or graph algorithms. To perform advanced graph analytics, data should be ETL'd from MySQL to specialized graph databases such as Neo4j.

Notable Mentions

Other enterprise RDBMSs like IBM Db2 also support recursive queries and, in some cases, dedicated graph processing engines tightly coupled with the relational core. While these systems often provide richer built-in algorithms, they still follow the same fundamental modeling principles as other SQL databases when it comes to representing nodes and edges.

Your choice of database doesn’t change the underlying graph modeling approach, but it can influence query design, indexing options, and available optimizations. Leveraging vendor-specific features where they align with your workload can simplify development, while sticking to portable SQL constructs ensures your implementation remains migration-friendly.

Benefits of Doing Graph Analytics in SQL Systems

While native graph databases are now widely available, practical considerations often justify conducting graph analysis directly within relational databases.

Proximity to Data

For many enterprises, proximity to data is the most compelling reason to run graph analytics in SQL. If your graph data already lives in a relational database, querying it in place avoids the time, complexity, and risk of moving it into a separate graph system. This not only reduces operational overhead but also provides near real-time insights without additional pipelines.

Cost Efficiency

Cost efficiency comes naturally. You can reuse existing database infrastructure, like hardware, licenses, monitoring, and backup systems. You don’t need to budget for and maintain a parallel graph database stack. The same applies to skills: SQL is widely understood in enterprise IT, meaning analysts, developers, and DBAs can work on graph workloads without learning a new query language or database platform.

Enterprise-grade Performance and Features

Relational systems also bring mature capabilities that graph-native engines often lack in enterprise deployments, including ACID compliance, granular access control, and integration with governance tools. In conjunction with well-chosen schema patterns and engine-level optimizations, like advanced indexing, clustering, and partitioning, SQL-based graph analytics can handle a surprising range of workloads efficiently.

Ecosystem Fit

This approach fits neatly into the existing analytics and compliance ecosystem. Graph queries can feed into BI dashboards, compliance reports, and auditing processes without intermediate data movement, giving both speed and traceability. For many organizations, this blend of flexibility, security, and integration is reason enough to explore graph analytics without leaving the relational world.

Limitations and Considerations

Running graph analytics in SQL and relational databases has practical boundaries that architects and data teams should evaluate before committing to this approach.

Traversal Performance

The most significant consideration is performance on deep or high fan-out traversals. While recursive CTEs and well-indexed edge tables work for moderate workloads, queries exploring multiple hops in large, dense graphs can generate explosive intermediate result sets. This can result in long execution times, high memory consumption, and unexpected strain on shared database resources.

Availability of Graph Algorithms

Native graph databases often include shortest-path, centrality, community detection, and other algorithms out-of-the-box. In SQL, these must be implemented manually, approximated with recursive queries, or run externally in an analytics environment. This increases development time and can make some advanced analytics impractical in production.

Schema Flexibility

Adding new node or edge types, or changing relationship structures, typically requires schema changes and possibly index redesigns. While JSON or array columns can soften this constraint, overuse of flexible data types can degrade performance and complicate indexing strategies.

Resource Contention

Resource contention is also an important consideration for enterprises. In a shared database, graph queries often compete with transactional workloads, risking slowdowns for critical applications. To counter this, there might be isolation strategies, like running analytics on a replica or in a dedicated instance. That further adds operational complexity.

Live Data Risks

While running graph analytics in place avoids ETL complexity, it also means querying live operational data. This raises questions about locking, read consistency, and compliance requirements for long-running analytics queries.

SQL vs Native Graph Databases in the Enterprise

SQL-based graph analytics makes the most sense when graph queries constitute a subset of a broader relational workload. Staying in SQL avoids the cost and complexity of a new platform if:

- You have most of your data already in relational tables

- Your graph use cases involve moderate traversal depth, predictable patterns, and integration with existing BI or compliance workflows

The familiar query language, mature security controls, and transactional integrity are compelling advantages, especially when graph queries must run alongside other relational queries in the same environment.

Native graph databases shine when the workload involves deep, irregular, or algorithm-heavy traversals. Use cases like real-time recommendations, fraud detection across massive transaction networks, or supply chain optimization across millions of interconnected entities benefit from graph-optimized storage and query execution engines.

On the other hand, native graph databases carry significant scaling challenges. The interconnected nature of graph data means that adding more hardware does not always translate to linear performance improvements. It can take hours to run multi-hop queries and struggle beyond 100GB of data.

From an operational perspective, the decision also involves data movement and governance. Introducing a graph database means creating new pipelines for ingest, synchronization, and security. In many enterprises, the trade-off between performance gains and the added operational complexity is what slows or stops native graph adoption.

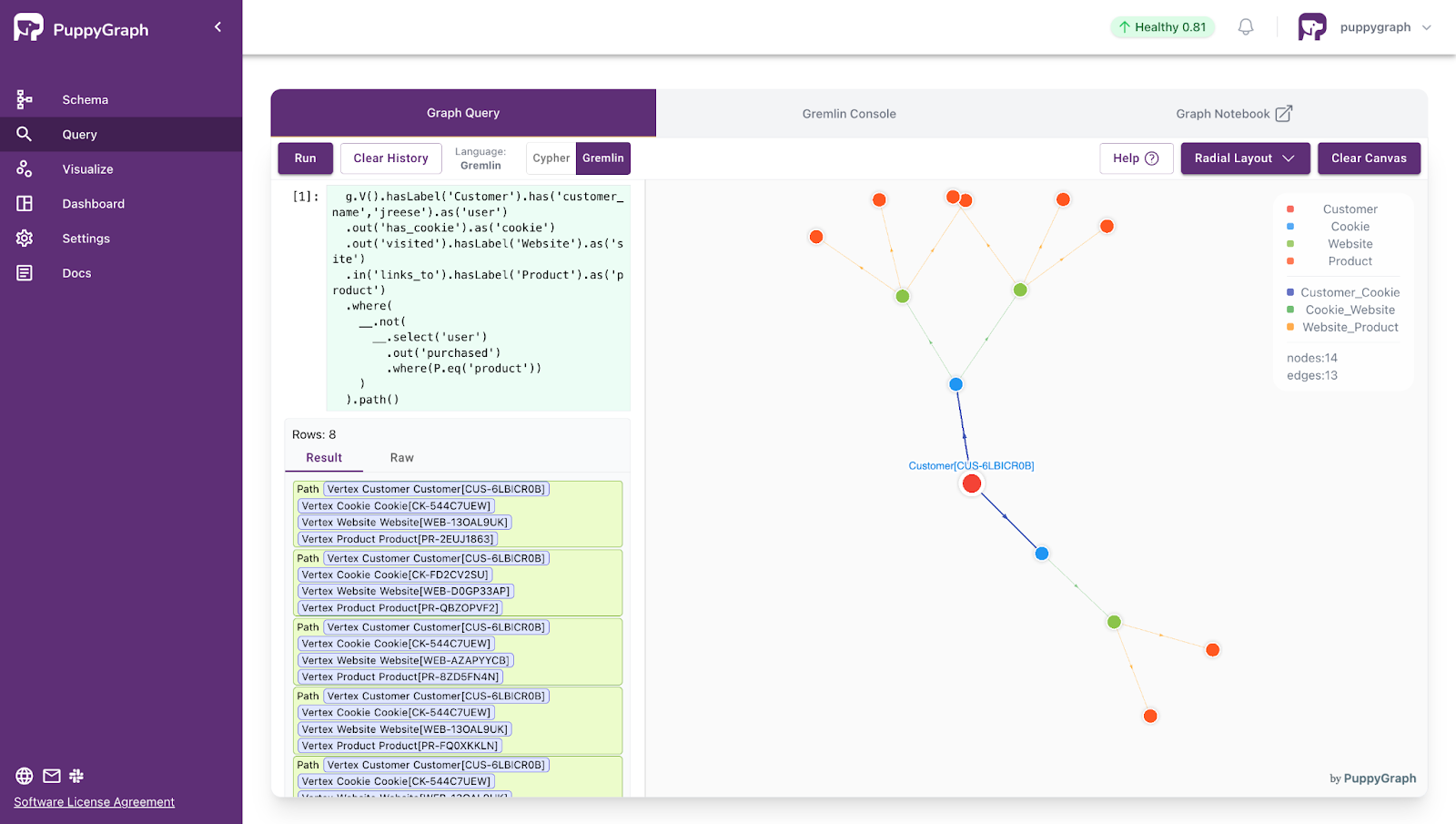

PuppyGraph: Query Relational Data as Graph

PuppyGraph is the first real-time, zero-ETL graph query engine. It lets data teams query existing relational stores as a single graph and get up and running in under 10 minutes, avoiding the cost, latency, and maintenance of a separate graph database. PuppyGraph is not a traditional graph database but a graph query engine designed to run directly on top of your existing data infrastructure without costly and complex ETL (Extract, Transform, Load) processes. This "zero-ETL" approach is its core differentiator, allowing you to query relational data in data warehouses, data lakes, and databases as a unified graph model in minutes.

Instead of migrating data into a specialized store, PuppyGraph connects to sources including PostgreSQL, Apache Iceberg, Delta Lake, BigQuery, and others, then builds a virtual graph layer over them. Graph models are defined through simple JSON schema files, making it easy to update, version, or switch graph views without touching the underlying data.

This approach aligns with the broader shift in modern data stacks to separate compute from storage. You keep data where it belongs and scale query power independently, which supports petabyte-level workloads without duplicating data or managing fragile pipelines.

PuppyGraph also helps to cut costs. Our pricing is usage based, so you only pay for the queries you run. There is no second storage layer to fund, and data stays in place under your existing governance. With fewer pipelines to build, monitor, and backfill, day-to-day maintenance drops along with your bill.

PuppyGraph also supports Gremlin and openCypher, two expressive graph query languages ideal for modeling user behavior. Pattern matching, path finding, and grouping sequences become straightforward. These types of questions are difficult to express in SQL, but natural to ask in a graph.

As data grows more complex, the teams that win ask deeper questions faster. PuppyGraph fits that need. It powers cybersecurity use cases like attack path tracing and lateral movement, observability work like service dependency and blast-radius analysis, fraud scenarios like ring detection and shared-device checks, and GraphRAG pipelines that fetch neighborhoods, citations, and provenance. If you run interactive dashboards or APIs with complex multi-hop queries, PuppyGraph serves results in real time.

Getting started is quick. Most teams go from deploy to query in minutes. You can run PuppyGraph with Docker, AWS AMI, GCP Marketplace, or deploy it inside your VPC for full control.

Conclusion

Relational databases can already reveal the hidden value in your connected data, if you know how to model, query, and analyze it effectively. But it will often force a difficult choice: accept limitations or invest in a separate graph database with all the data movement and governance overhead that comes with it.

PuppyGraph aims to free enterprises from having to choose between agility and capability. It lets you run high-performance graph queries directly on your existing relational data, eliminating ETL and avoiding disruptive migrations. You can meet governance requirements, control infrastructure costs, and keep security policies intact, while still getting graph-native speed and flexibility.

Welcome to try out PuppyGraph today via our forever-free Developer Edition or book a demo with our team.

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single noded

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in developer edition & enterprise features

- Designed for production

- Available via AWS AMI & Docker install