Stardog vs Neo4j: Key Differences

Modern systems lean heavily on relationships. APIs depend on identity graphs, data platforms depend on lineage, and analytics depend on how entities connect across silos. As a result, graph technology has moved from a niche tool to core infrastructure. But not all graph systems solve the same problem; treating them as interchangeable only precipitates costly mistakes.

This article looks at two graph platforms: Stardog and Neo4j. We discuss the fundamentals: data models, query execution, consistency, and operational posture. In the end, you will gain sufficient understanding about each system before you commit to a graph strategy. We will also demonstrate why PuppyGraph provides a modern, future-proof alternative for building graph-based systems.

What Is Stardog?

Stardog is an enterprise knowledge graph platform for organizations needing a semantic layer across multiple data systems. Stardog uses RDF as the data model and SPARQL as the query language, which makes it well-suited for scenarios where meaning, schema, and inference have as much priority as connectivity. If you want to adopt Stardog, you likely have data that reside in many places, follows inconsistent schemas, and requires consolidation under a shared conceptual model.

Architecturally, you will see Stardog less as a general-purpose graph database and more as a semantic integration and reasoning platform. The product emphasizes standards compliance, ontology-driven modeling, and governance features that appeal to larger organizations with complex data estates.

Key Features

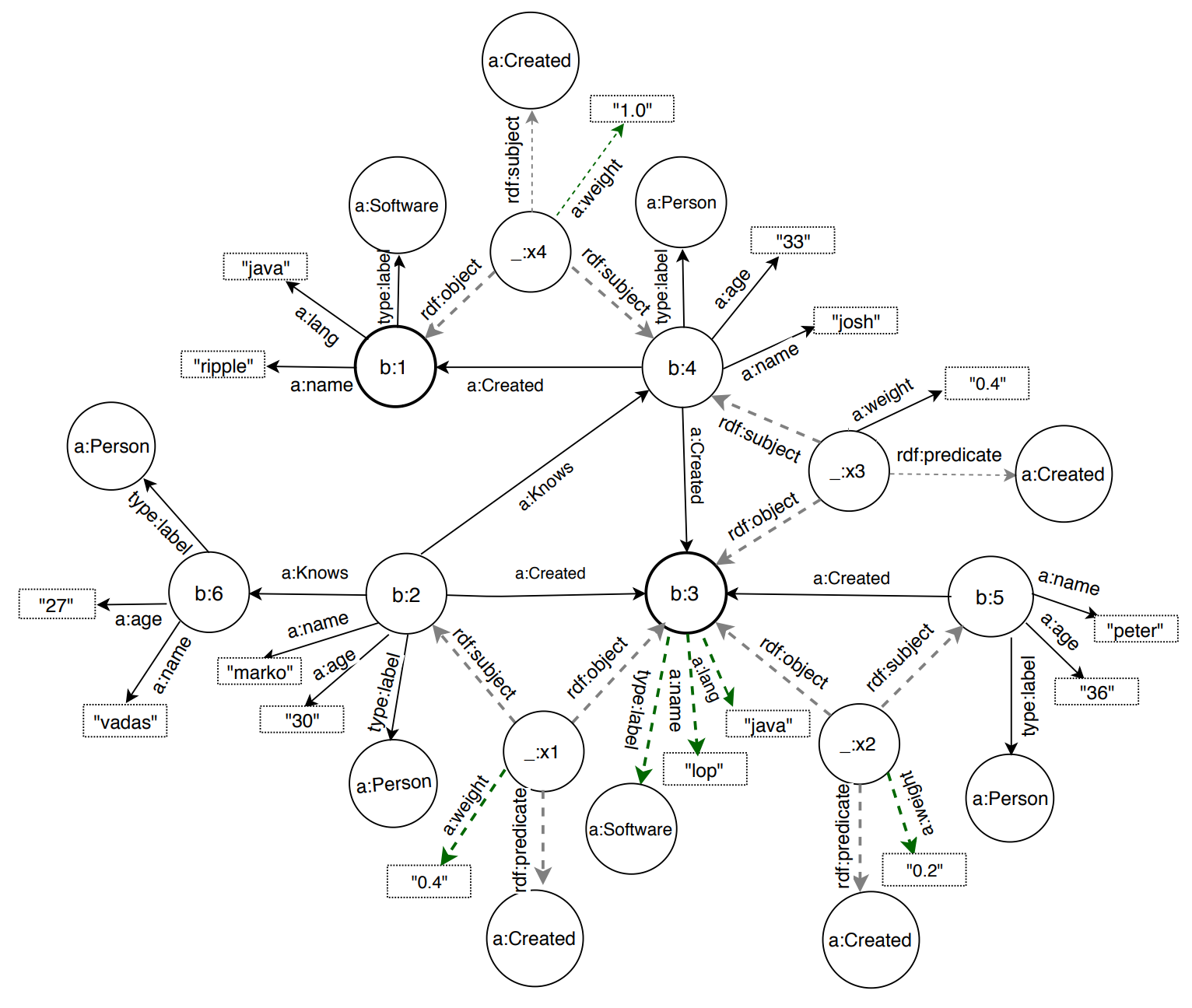

RDF-based knowledge graph architecture

Stardog stores data as RDF triples and quads, representing facts as subject–predicate–object statements with optional graph context. This model supports explicit representation of entities, relationships, and metadata. Data persists in a form compatible with standard RDF tooling and specifications.

SPARQL 1.1 query and update support

Stardog supports the SPARQL 1.1 specification for querying, inserting, updating, and deleting RDF data. Queries operate over graph patterns rather than imperative traversals. The platform also supports SPARQL-based federation and update operations as part of its core query engine.

Ontology management and reasoning

Stardog includes a reasoning engine that applies RDFS and OWL semantics to RDF data. It can materialize inferred triples or compute inferences at query time, depending on configuration. The platform also supports user-defined rules expressed over RDF graphs.

Virtual Graphs for external data access

Stardog supports Virtual Graphs, which expose external data sources through an RDF mapping layer. These virtual graphs allow SPARQL queries to operate over non-RDF systems without requiring full data ingestion. Mappings define how source schemas translate into RDF predicates and entities.

Transaction support and consistency

Stardog supports ACID transactions for RDF data operations. Write operations execute within transactional boundaries, and queries operate against consistent snapshots of the graph. This behavior applies to both data updates and schema changes.

Security and access control

Stardog includes a role-based security model for managing access to databases, graphs, and operations. Permissions associate with roles, and roles assign to users. Security policies apply consistently across query execution and administrative actions.

Administrative and integration interfaces

Stardog exposes administrative capabilities through APIs and a command-line interface. These interfaces support database management, query execution, configuration, and monitoring. The platform also provides client libraries and HTTP endpoints for integrating applications.

What is Neo4j?

Neo4j is a native property graph database for transactional workloads and graph traversals. It stores data as nodes and relationships with properties attached to both, and it exposes this model through the Cypher query language. Neo4j treats the graph as the primary storage abstraction instead of a layer on top of another data model.

Key Features

Native property graph storage

Neo4j implements a native property graph model where nodes and relationships are first-class citizens. Relationships are stored with direct pointers between nodes, which allows constant-time traversals regardless of graph size. Properties attach directly to nodes and relationships, avoiding joins or indirection layers during query execution.

Cypher query language

Neo4j uses Cypher as its declarative query language that can express graph patterns explicitly and intuitively, making relationships central to query structure. Queries operate as pattern matches rather than set-based joins, which aligns closely with traversal-heavy workloads and application-driven access patterns.

ACID transactions and transactional consistency

Neo4j supports fully ACID-compliant transactions. Write operations execute within transactional boundaries and become visible only after commit. This applies consistently across single-instance and clustered deployments, providing predictable behavior for concurrent reads and writes.

Cluster architecture

Neo4j Enterprise Edition supports clustering for availability and read scalability. Servers host databases in either primary or secondary mode, and a single server can host multiple databases in different modes simultaneously.

Primaries accept both reads and writes and replicate transactions using the Raft consensus protocol. Only one primary acts as the writer for a given database at any time, while other primaries replicate synchronously. A database requires a majority of primaries (N/2 + 1) to acknowledge a transaction before commit.

Secondaries replicate data asynchronously from primaries through transaction log shipping. They execute read-only workloads and act as scale-out replicas. Losing a secondary does not affect database availability, only aggregate read throughput.

Neo4j supports causal consistency to ensure read-after-write semantics. Clients can request a bookmark after a write transaction and pass it to subsequent reads. The cluster then routes those reads to servers that have processed the referenced transaction, making sure the client observes its own writes even when reads execute on secondary servers.

Indexing and Querying

Neo4j uses schema indexes and constraints defined on labels and properties to support efficient lookups and enforce data integrity. Constraints include uniqueness and existence rules that apply at write time. Indexes integrate directly with the Cypher query planner and influence plan selection during execution.

Query performance depends heavily on memory configuration. Neo4j separates memory usage between these two:

- Page cache: stores graph data and indexes

- The JVM heap: supports query planning, execution, and transaction state

The platform provides tooling like neo4j-admin memrec to recommend memory sizing based on available hardware.

Cypher supports advanced graph operations, including pattern comprehensions, variable-length path matching, shortest path queries, and subqueries. These operators allow expressive graph queries to run within the transactional engine, without relying on external processing layers.

Security and Access Control

Neo4j’s security capabilities vary by edition. The Community Edition supports basic authentication, while the Enterprise Edition adds role-based access control (RBAC). RBAC allows administrators to define permissions at the level of databases, labels, relationship types, and procedures.

Enterprise deployments can integrate with external identity systems like LDAP, Active Directory, and Kerberos. Neo4j also supports encrypted communication using TLS and provides configuration options for securing endpoints, enforcing authentication, and auditing access. Neo4j publishes security guidance to support hardened production deployments.

Operations and Tooling

Neo4j provides distinct tooling for bulk initialization and ongoing data updates. The neo4j-admin import utility supports high-throughput CSV ingestion but runs offline and requires exclusive access to the database. For incremental ingestion, Cypher’s LOAD CSV command enables data updates while the database remains online.

Backup and recovery capabilities depend on edition. Neo4j Enterprise Edition supports online and differential backups, while Community Edition relies on offline file-based backups. For monitoring, Neo4j exposes operational metrics through JMX and Prometheus-compatible exporters, covering areas such as cache utilization, query execution, and JVM performance.

Stardog vs Neo4j: Architectural Comparison

The following table illustrates the architecture-adjacent properties of Stardog and Neo4j across data models, storage, query execution, consistency, and clustering mechanics.

When to Use Stardog

Semantic correctness and reasoning at the database layer

Use Stardog when your system requires formal semantics that the database itself enforces. Stardog applies RDFS and OWL reasoning directly over stored and virtualized data; so inferred facts participate in queries and validation. As a result, the data layer, not application logic, contrives semantic enforcement.

Neo4j can model hierarchies and relationships, but it does not include a native semantic reasoning engine. Queries, procedures, or upstream processing must have any inference-like behavior encoded into them. Although it works for many applications, it does not provide ontology-level guarantees as the graph evolves.

Knowledge graphs built on shared or external standards

Stardog fits architectures that depend on W3C standards like RDF, OWL, and SPARQL. These standards enable portability across tools, alignment with public vocabularies, and interoperability with external datasets. It is substantial in environments where data models must remain stable across vendors, teams, or long time horizons.

Neo4j uses a proprietary property graph model and Cypher. While expressive and efficient, the model relies on internal identifiers and conventions rather than globally defined IRIs. That variance becomes observable when graphs need to interoperate past a single platform or organization.

Integrating heterogeneous data without enforcing a single schema

Stardog works well when data originates from multiple systems with overlapping or inconsistent schemas. RDF’s triple model and ontology alignment allow entities from different sources to coexist and reconcile over time. Stardog’s reasoning layer can then reconcile differences using explicit semantic rules.

Neo4j expects stronger agreement on labels, relationship types, and property conventions. That assumption aligns with application-owned graphs, but it requires a more restrained coordination when data comprises many upstream systems.

Querying across systems using federation and virtualization

Stardog supports SPARQL-based federation and data virtualization through Virtual Graphs. It allows queries to span local RDF data and external sources as part of a single logical query plan. The database remains aware of schema and semantics even when data has not fully turned up.

Neo4j supports clustering and multi-database routing, but it does not provide protocol-level federation across external graph or non-graph systems. Cross-system queries typically require ingestion or application-level orchestration.

Knowledge graph as an authoritative semantic layer

Choose Stardog when the graph acts as a system of record for meaning, not merely a query accelerator. This includes domains where explainability, traceability, and governance matter, and where schemas evolve through controlled ontology changes in place of ad-hoc mutations.

Neo4j better serves graphs that directly drive application behavior and transaction flow, whereas Stardog for graphs that define shared understanding across systems, teams, or organizations.

When to Use Neo4j

High-throughput transactional graph workloads

Use Neo4j if your system performs frequent writes and requires predictable transaction latency. Neo4j’s native property graph storage and ACID transaction model support continuous updates without inference overhead. Writes route through a single leader per database and replicate through Raft with explicit and bounded transaction ordering.

Stardog supports transactions but includes reasoning and semantic validation into the data path. Despite adding semantic accuracy, it adds cost for mutation-heavy workloads with dominating writes.

Deep traversals on live, connected data

Neo4j fits workloads dominated by multi-hop traversals over changing graphs. Relationships store as first-class records with direct pointers, so traversal cost scales with the explored subgraph. It satisfies use cases that depend on path expansion, neighborhood exploration, and graph-shaped access patterns at runtime.

Stardog executes graph queries through triple pattern matching and optional inference, which does not favor latency-sensitive traversal on hot data.

Application-owned graph models

Choose Neo4j when a single application or platform owns the graph schema and controls how data evolves. Labels, relationship types, and properties act as application-level contracts rather than shared ontologies. Teams can evolve the model quickly without coordinating ontology changes across organizations.

Stardog assumes shared meaning across datasets and teams. Its advantage eventuates when schemas represent concepts that outlive individual applications.

Developer-centric query workflows

Neo4j’s Cypher language expresses graph logic using explicit path patterns and variables. It closely resembles how developers reason about relationships and flows. Query structure remains readable as graphs grow in size and complexity, more so for traversal-heavy logic embedded in services.

Stardog’s SPARQL prioritizes standards compliance and formal semantics. You get interoperability but at the same time need deeper RDF and ontology expertise, which can decelerate iteration for application teams.

Operational graphs driving runtime decisions

Neo4j works well when the graph directly participates in request-time decisions. The clustering architecture separates write coordination from read scaling and supports causal consistency for read-after-write behavior. This allows applications to treat the cluster as a single logical system while distributing load across replicas.

Stardog more often acts as a semantic integration layer that downstream systems query; Neo4j situates with graphs that sit directly in the request path.

Which Is the Better Fit: Stardog or Neo4j?

Choose Stardog When Meaning Comes First

Opt for Stardog when the graph acts as a shared semantic layer. RDF and OWL encode meaning explicitly, and reasoning makes implied facts queryable without application logic. And for your use case, you prefer correctness, ontology alignment, and long-term schema stability over traversal latency.

Choose Neo4j When the Graph Is Operational

Choose Neo4j when the graph drives live application behavior. Native storage and index-free adjacency favor fast, multi-hop traversals over frequently changing data. Cypher expresses traversal logic directly, with semantics enforced in application code.

So to summarize, choose Stardog if most of these apply:

- Semantics must be enforced by the database.

- Data spans multiple systems and schemas.

- The graph serves as a long-lived knowledge layer.

And select Neo4j if:

- Traversal latency dominates query cost.

- The graph sits on the request path.

- Schema evolution follows application needs.

Why Consider PuppyGraph as an Alternative

Stardog is built for RDF graphs, which is ideal when you need explicit semantics, ontology alignment, and reasoning. But many organizations still prefer a property graph for knowledge graphs because the model is simpler, and querying entity attributes and relationships feels more natural for day-to-day analytics and application needs.

Neo4j offers that property graph experience, but it often comes with a full materialization workflow, plus ongoing ETL and sync to keep the graph current.

PuppyGraph strikes the balance by keeping the property graph model while letting teams stand it up directly on existing tables, without maintaining a separate graph pipeline.

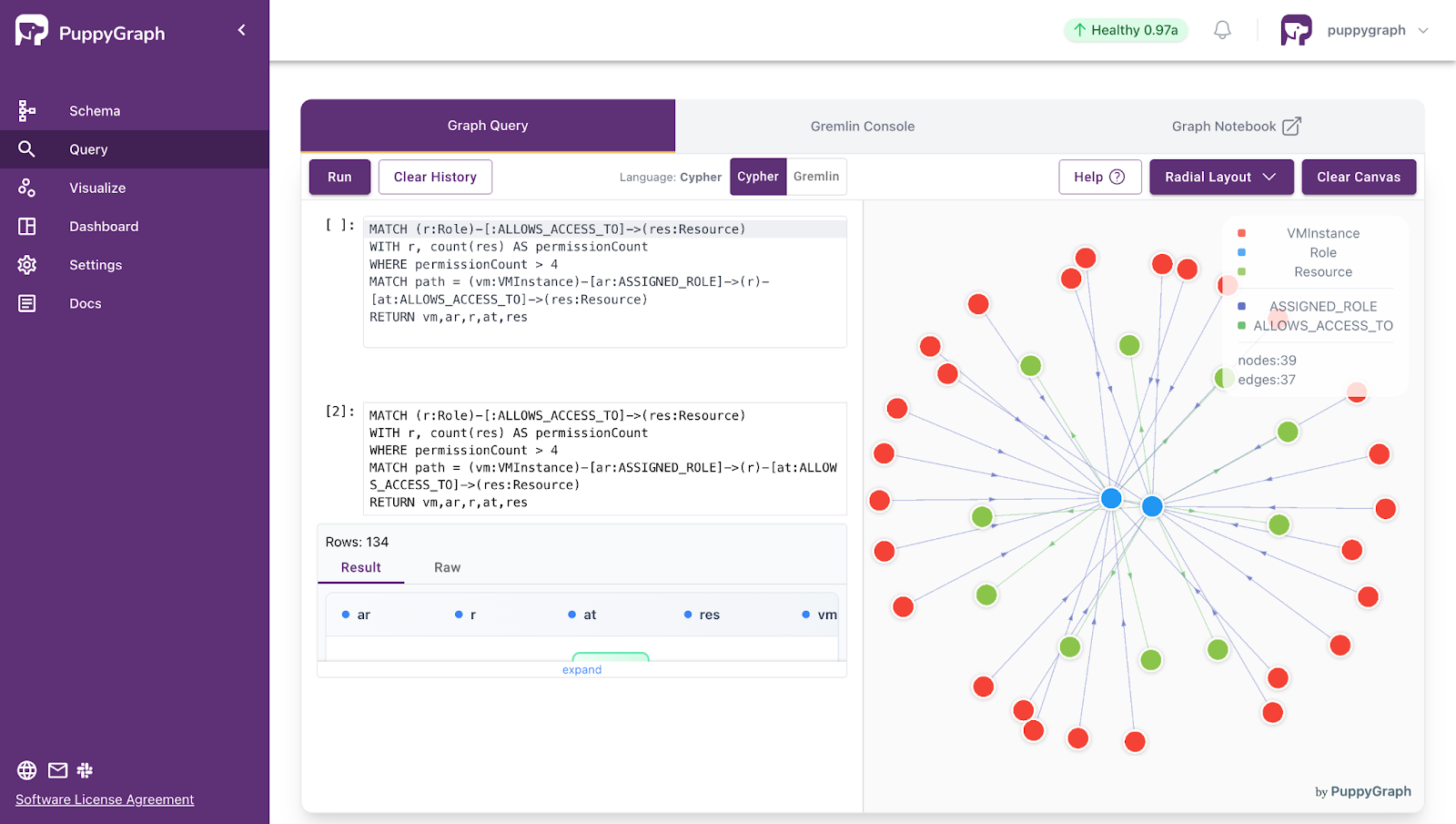

PuppyGraph acts as a real-time graph query engine over existing relational databases and data lakes. It builds a graph abstraction at query time, without ETL pipelines or data duplication.

PuppyGraph connects directly to systems you already use: Apache Iceberg, Delta Lake, Apache Hudi, MySQL, PostgreSQL, and DuckDB. Graph schemas map relationships from source tables using metadata, allowing teams to define and revise graph views without rebuilding pipelines or copying data into a separate graph store.

Here are some of PuppyGraph’s key capabilities:

- Zero ETL graph querying

PuppyGraph runs queries directly on source systems. Data remains in place, governed by existing access controls and update processes.

- No duplicated storage

Because data is not ingested into a graph database, teams avoid maintaining parallel datasets, indexes, and security policies.

- Real-time results

Queries operate on live data rather than periodically refreshed graph snapshots. This avoids staleness when underlying tables change frequently.

- Distributed query execution

PuppyGraph uses a distributed compute engine with parallel and vectorized execution to support deep traversals over large datasets.

- Graph and SQL on the same data

Teams continue to use SQL engines for tabular analysis while using PuppyGraph for relationship-heavy queries, without forcing all workloads into a single system.

- Lower total cost of ownership:

Traditional graph databases introduce additional cost through ETL pipelines, duplicated storage, and high-memory infrastructure tuned for traversal performance. PuppyGraph avoids these costs by querying existing data stores directly, removing the need for a separate graph storage layer, parallel governance, or specialized hardware.

- Flexible graph modeling

Multiple graph schemas can coexist over the same source tables. Models evolve independently of data ingestion, supporting iterative analysis.

- Standard graph query languages

PuppyGraph supports openCypher and Gremlin, along with built-in visualization tools for interactive exploration.

- Proven at Enterprise scale

Half of the top 20 cybersecurity companies already leverage PuppyGraph, as well as engineering-driven enterprises like AMD and Coinbase. Whether it’s multi-hop security reasoning, asset intelligence, or deep relationship queries across massive datasets, these teams trust PuppyGraph to replace slow ETL pipelines and complex graph stacks with a simpler, faster architecture.

As your data grows more complex, the most valuable insights will often lie in how entities relate. PuppyGraph brings those insights to the surface, whether you’re modeling organizational networks, social introductions, fraud and cybersecurity graphs, or GraphRAG pipelines that trace knowledge provenance.

Deployment is simple: download the free Docker image, connect PuppyGraph to your existing data stores, define graph schemas, and start querying. You can deploy PuppyGraph through Docker, AWS AMI, GCP Marketplace, or within a VPC or data center for full data control.

Conclusion

The most practical next step is to audit your graph’s bearing and characteristics. If your graph workloads run on top of relational or lakehouse data, consider whether you can rationalize a separate graph store. There are realistic appeals for teams to use and pay for both Stardog and Neo4j, or a similar combination, and the interface between them. However, as we’ve discussed, it results in complex ETL pipelines, duplicated data, and swelling infrastructure costs. And it’s impractical to attempt stretching one platform to cover another one’s responsibilities.

So if you need deep graph queries on your existing, live data without rebuilding the entire stack, evaluate PuppyGraph.

Explore the forever-free PuppyGraph Developer Edition, or book a demo to see it in action.

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single noded

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in developer edition & enterprise features

- Designed for production

- Available via AWS AMI & Docker install