GraphDB vs Neo4j: Key Differences Explained

Graph databases promise to lessen complexity by treating relationships as first-class data. To that end, Neo4j optimizes for speed and immediacy: labeled property graphs, index-free adjacency, and a traversal engine to answer multi-hop questions post-haste. For use cases like fraud detection, recommendations, and identity graphs, this method feels natural, fast, and oftentimes sufficient.

However, as data disseminates across teams, vendors, and domains, flexibility alone does not suffice. You need shared meaning. Ontologies, global identifiers, and formal semantics become consequential to answer not only the connections, but what must be true. To fit such criteria, GraphDB trades traversal speed for RDF, SPARQL, and built-in reasoning that prioritize interoperability and logical consistency.

By comparing Neo4j and GraphDB across data models, execution behavior, and real workloads, this article helps you choose the graph that fits the problem you’re actually solving.

What Is GraphDB?

GraphDB is an enterprise-grade RDF database designed for knowledge graphs, accenting semantics, interoperability, and logical correctness. It doesn’t target operational graph workloads but focuses on standards-based data integration, metadata management, and AI-ready knowledge systems. It implements W3C specifications end to end and is built to serve as long-lived semantic infrastructure.

RDF as the Core Data Model

GraphDB stores data as RDF triples: subject, predicate, object; each element is identified by a globally unique IRI. This makes it possible to merge data from many systems without identifier conflicts, a critical use case in large organizations where schemas overlap, containing datasets different teams, vendors, or public sources produce.

Contrary to property graphs, RDF deliberately avoids embedding structure-specific assumptions. Relationships are first-class predicates rather than edges with implicit semantics. This makes RDF slower to traverse but far more resilient when schemas evolve or overlap across domains, making it a cogent fit for cross-domain knowledge modeling.

Ontologies, Reasoning, and Inference

GraphDB’s defining capability is its built-in reasoning engine. Ontologies that RDFS and OWL define establish formal semantics for classes, properties, hierarchies, and constraints. These ontologies actively shape the validity of the data and the new facts you can derive.

GraphDB applies forward-chaining reasoning at ingest time. As triples are written, the engine evaluates them against the configured ruleset and materializes all implied statements into storage. Subclass hierarchies, property inheritance, transitive relations, equivalence mappings, and domain or range implications become concrete data. Queries run against a logically closed graph, which keeps performance predictable and avoids runtime reasoning costs.

Just as important is consistency enforcement. GraphDB can reject writes that violate ontology rules, for example, disjoint classes, functional properties, and invalid cardinalities. In the event of a violation, the transaction fails and rolls back to prevent semantic drift. Neo4j supports uniqueness and existence constraints, but it can’t enforce logical consistency or extract new knowledge at the database layer.

Querying with SPARQL and RDF-star

GraphDB uses SPARQL 1.1 for querying, supporting complex pattern matching, aggregation, and federation across remote endpoints. SPARQL’s execution model treats queries as algebra over triples, instead of paths through a graph. The queries remain stable with schema evolution because predicates carry explicit meaning through IRIs in place of positional context. So your queries are more resilient to structural drift, though less intuitive for traversal-heavy workloads.

With RDF-star and SPARQL-star, GraphDB can attach metadata directly to statements without reifying triples into auxiliary nodes. RDF-star allows triples themselves to appear as subjects or objects of other triples. GraphDB natively stores these embedded statements for efficient queries over provenance, confidence scores, temporal validity, or source attribution, without exploding the graph size or complicating query structure. This is a significant feature in, for example, legal, scientific, and regulatory datasets.

Designed for Semantic Integration

Environments requiring data longevity, explainability, and standards compliance make for perfect use cases of GraphDB. It’s commonly used in life sciences, publishing, government, and enterprise knowledge platforms; in these systems, correctness, interoperability, and reasoning supercede millisecond-level traversal latency.

What Is Neo4j?

Neo4j is a native graph database built for fast traversal over highly connected data. It popularized the Labeled Property Graph (LPG) model and remains a popular choice for operational graph workloads prioritizing low latency, frequent updates, and developer productivity.

The Labeled Property Graph Model

Neo4j is built around the Labeled Property Graph (LPG) model that contains two core record types: nodes and relationships. Nodes represent entities and carry zero or more labels plus key–value properties. Relationships always connect two nodes, have a direction and a required type, and can also store properties. Storing metadata directly on relationships allows teams to model events and interactions without introducing intermediate nodes just to hold attributes.

Labels play an active role in query planning and execution. Most schemas use labels to narrow the starting set of nodes (for example, :Person) and indexes on a small number of properties such as an email or ID. From there, queries expand through typed relationships, often using variable-length patterns to express multi-hop logic. This encourages explicit, domain-level edges like :PURCHASED, :TRANSFERRED_TO, or :FRIEND_OF instead of generic predicates with values.

Although Neo4j is schema-optional, teams usually introduce constraints like uniqueness, existence, or type checks once the model settles.

Index-Free Adjacency and Traversal Performance

Relationships are stored as first-class records that directly reference their start and end nodes. Once a traversal begins, the engine follows in-memory references instead of consulting indexes or join structures.

This design shows its strength as traversals grow beyond a few hops. In relational or triple-store systems, cost rises with join complexity and intermediate results. In Neo4j, cost doesn’t scale with total graph size but mainly with branching factor and depth. That makes traversal latency more predictable for real-time workloads that need to explore five to ten hops under tight SLAs.

Neo4j intentionally trades off global semantic enforcement for local structure and execution speed. Meaning, in place of being imposed on by the database, comes from topology and application logic.

Cypher, GQL, and Query Execution

Cypher is a declarative language designed around graph patterns. The query directly expresses nodes, relationships, directionality, and variable-length paths, thereby making the intent readable and closely oriented with execution.

Recent releases align Cypher with the ISO GQL standard, positioning Neo4j as a reference implementation for property-graph querying. This improves long-term portability while preserving Cypher’s core execution model: path expansion, filtering, and aggregation over explicit relationships.

Cypher operates strictly on explicit data. No built-in inference, inheritance, or ontology expansion exists at query time. That keeps execution deterministic and fast, but it also means semantic interpretation must live in queries or upstream modeling.

Transactional Guarantees and Write Behavior

Neo4j is tuned for mutation-heavy workloads. Writes are ACID-compliant and optimized to complete quickly under concurrent load. Constraints exist for uniqueness, existence, and type checks, but they are deliberately lightweight.

Because Neo4j doesn’t materialize inferred data, writes don’t trigger cascading recomputation. You can add, remove, or update edges and properties continuously forgoing global side effects. Contrarily, systems with inference pipelines must re-evaluate rules on ingest; it strengthens guarantees but slows writes.

Neo4j is therefore a good fit for protean graph systems and the likes where operations, not semantics, determine authenticity.

Built for Operational Graph Applications

Neo4j performs best when the graph sits on the request path. Its architecture supports millisecond-level queries under high concurrency, befitting fraud detection, recommendation engines, real-time personalization, and network analysis.

In these systems, the graph answers situational questions: who is connected right now, or which paths exist at this moment; the graph doesn’t act as a canonical source of truth. Formal semantics and ontology validation are usually unnecessary overhead.

Core Differences Between GraphDB vs Neo4j

When to Use GraphDB

Formal Semantics and Built-In Reasoning

Choose GraphDB when you must encode meaning explicitly and have the database enforce it. Its native RDFS and OWL reasoning materializes inferred facts and blocks inconsistent writes at commit time. Neo4j can represent hierarchies, but reasoning over them usually lives in queries or application code, potentially debilitating assurances as the graph changes.

Integrating Data Across Systems and Organizations

GraphDB works well when data comes from many sources with overlapping or conflicting schemas. Globally unique IRIs let entities from different systems merge cleanly into one graph. Neo4j depends on internal identifiers and conventions; they are effective inside a single application but fragile when data needs to interoperate across vendors or public datasets.

Standards-Based Querying and Federation

Consider if your architecture depends on W3C standards, SPARQL federation, or long-term portability; then GraphDB is the safer recourse. SPARQL can query multiple remote endpoints as part of a single logical query. Neo4j’s Fabric, although supports sharding and routing, doesn't provide protocol-level federation with external graph systems.

Knowledge Graph as a System of Record

GraphDB fits use cases that entail correctness, explainability, and governance over any other concern. Domains like life sciences, publishing, government, and regulation benefit from ontology validation, inference, and semantic search. Neo4j is optimized for fast traversal and frequent updates, and so less suited as an authoritative semantic foundation.

When to Use Neo4j

Fast, Deep Traversals on Live Data

Choose Neo4j when your workload relies on multi-hop traversals over frequently changing data. Its index-free adjacency keeps traversal cost proportional to the subgraph you explore. GraphDB’s triple-pattern execution and inference pipeline add overhead that makes it less suited for latency-sensitive, traversal-heavy queries.

Building Transactional or Event-Driven Systems

Neo4j fits systems with high write rates, continuous updates, and tight latency budgets. Use cases like fraud detection, recommendations, and identity resolution benefit from its optimized write path and transactional guarantees. GraphDB concentrates on correctness and reasoning, increasing ingest cost and slows mutation-heavy workloads.

Developer-Centric Query Experience

Cypher’s pattern-based syntax makes it easier to express graph logic in a way that matches how developers think about relationships. SPARQL favors formal semantics and standards compliance, but it requires deeper RDF expertise, which can slow iteration for application teams.

Optimizing for Operational Graph Analytics

Neo4j works best when the graph directly drives application behavior rather than serving as a semantic reference layer. Its runtime optimizations, alignment with GQL, and support for vector-based workflows make it well suited for GraphRAG and real-time decisioning. GraphDB integrates with AI pipelines, but its core strength remains semantic enrichment.

Which Is the Better Fit: GraphDB or Neo4j?

The right choice between these two systems follows from your domain invariants and query patterns.

Choosing GraphDB

Pick GraphDB when the graph functions as a knowledge contract across teams and over time. RDF’s global IRIs let you merge datasets without inventing new identity schemes, and ontologies encode domain rules as formal semantics rather than application conventions. Forward-chaining reasoning materializes implied facts at ingest, producing stable, explainable results even for inconsistent upstream data. If your roadmap includes regulated data, FAIR interoperability, or a single authoritative source for entities and taxonomies, GraphDB removes ambiguity that Neo4j can’t enforce at the database layer.

Choosing Neo4j

Go with Neo4j when users and services depend on fast, multi-hop traversals over live data. The LPG model maps cleanly to product behavior: edges carry properties, relationships stay explicit, and schemas evolve naturally. Index-free adjacency keeps traversal cost proportional to the explored subgraph, support use cases like fraud detection, identity resolution, and real-time recommendations. GraphDB can represent the same facts, but its triple-pattern execution and inference-heavy ingest typically add overhead that request-path systems feel first.

So to summarize, choose GraphDB if most of these apply:

- You need RDFS or OWL reasoning and queries must include implied facts.

- Data spans multiple systems and requires global identifiers (IRIs) and standards-based interchange.

- You must enforce semantic constraints as part of governance.

- You expect federated SPARQL queries across internal and external datasets.

Choose Neo4j if most of these apply:

- The graph drives real-time decisions and traversal latency dominates cost.

- You need first-class edge properties with frequent updates.

- Your team benefits from a developer-centric pattern language (Cypher/GQL) and fast iteration.

- Semantic meaning can live in application logic.

Why Consider PuppyGraph as an Alternative

Neither system is ideal when the graph isn't a greenfield. GraphDB’s inference and governance shift cost to ingestion and modeling, potentially thwarting expeditious changes. Neo4j’s advantages in operations often come with ETL and duplication overhead when data already resides in warehouses or lakehouses; teams copy data into a separate graph, run pipelines, and reconcile access controls across stacks. At that point, the graph becomes another system to operate.

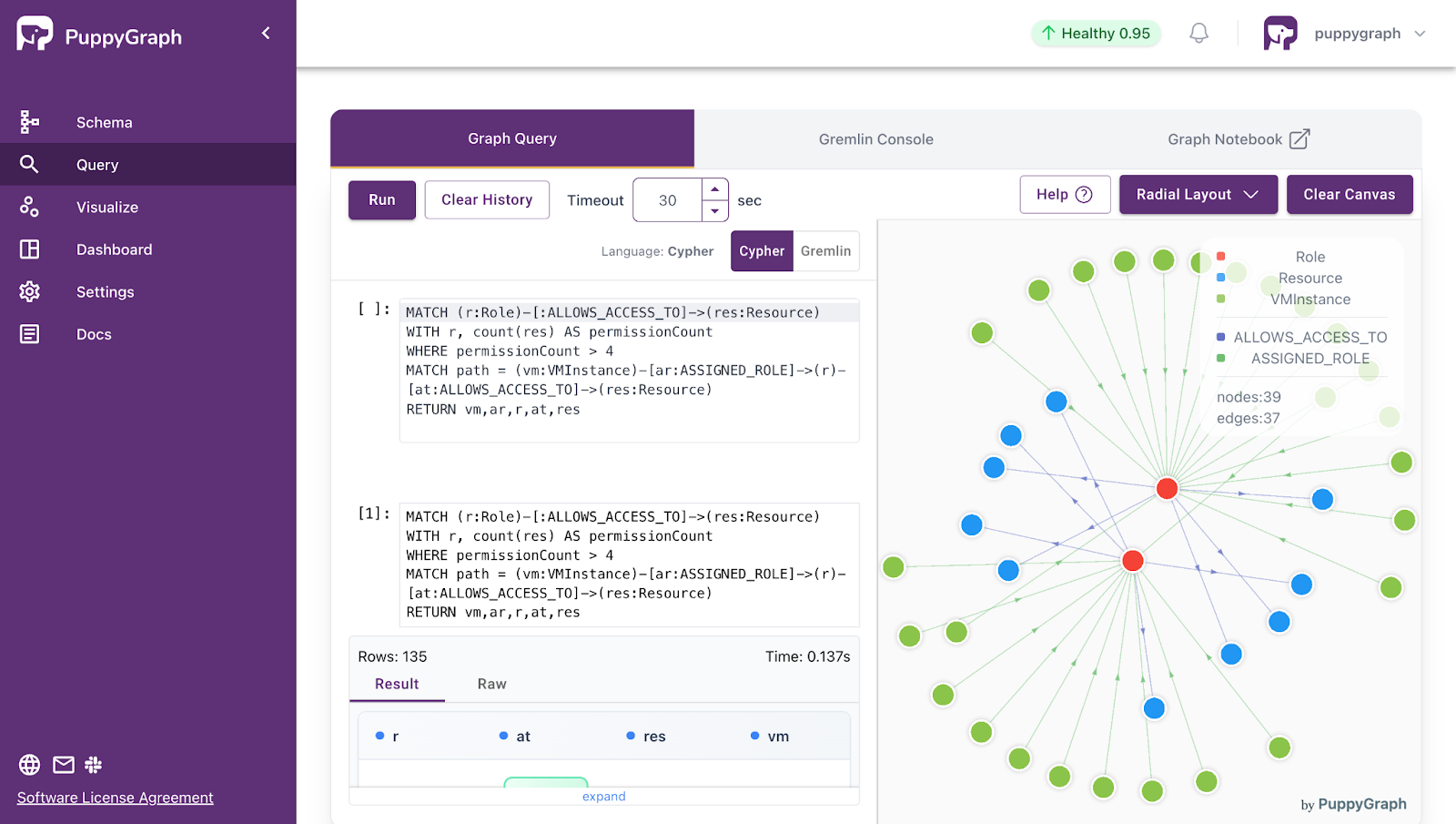

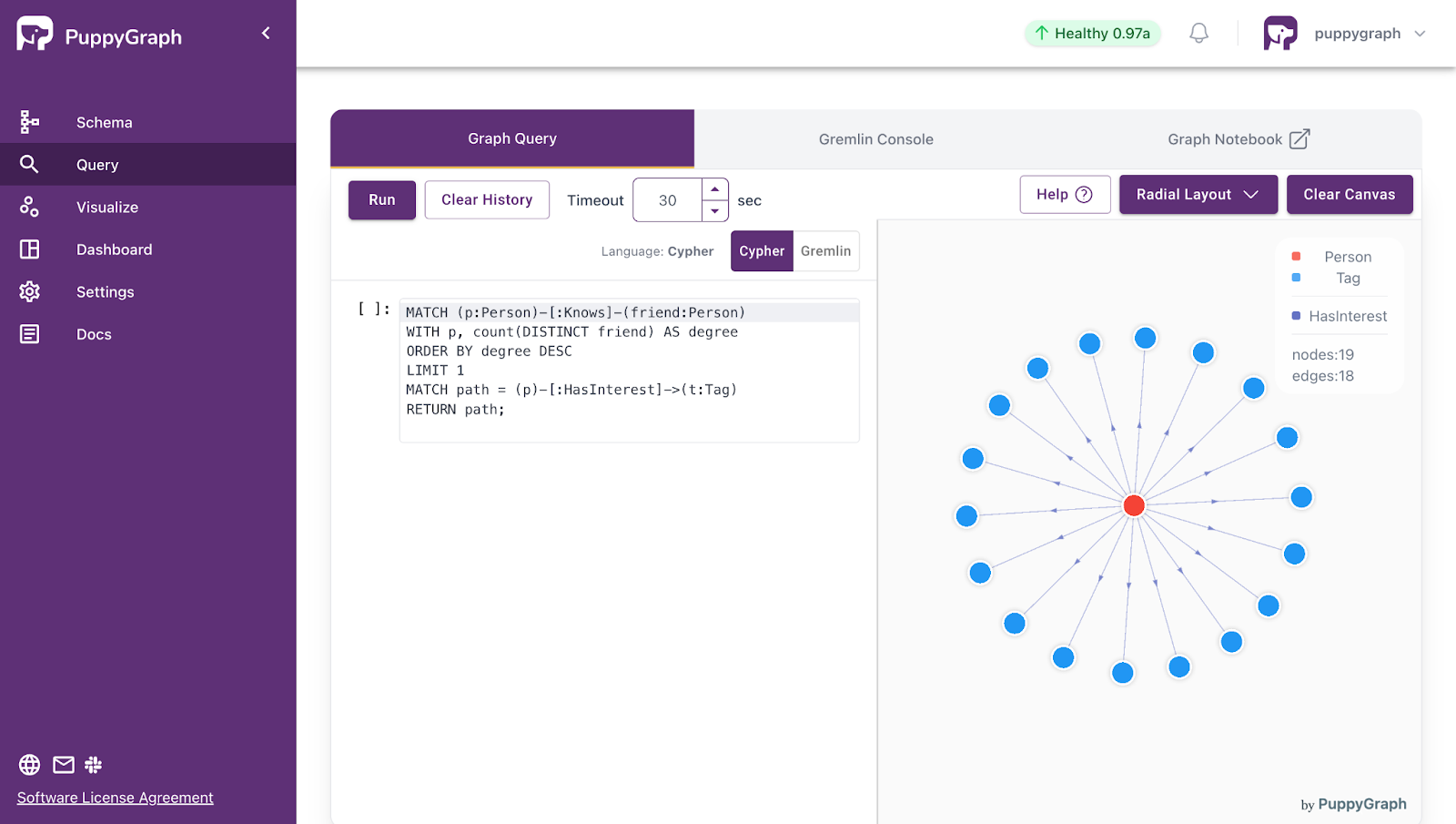

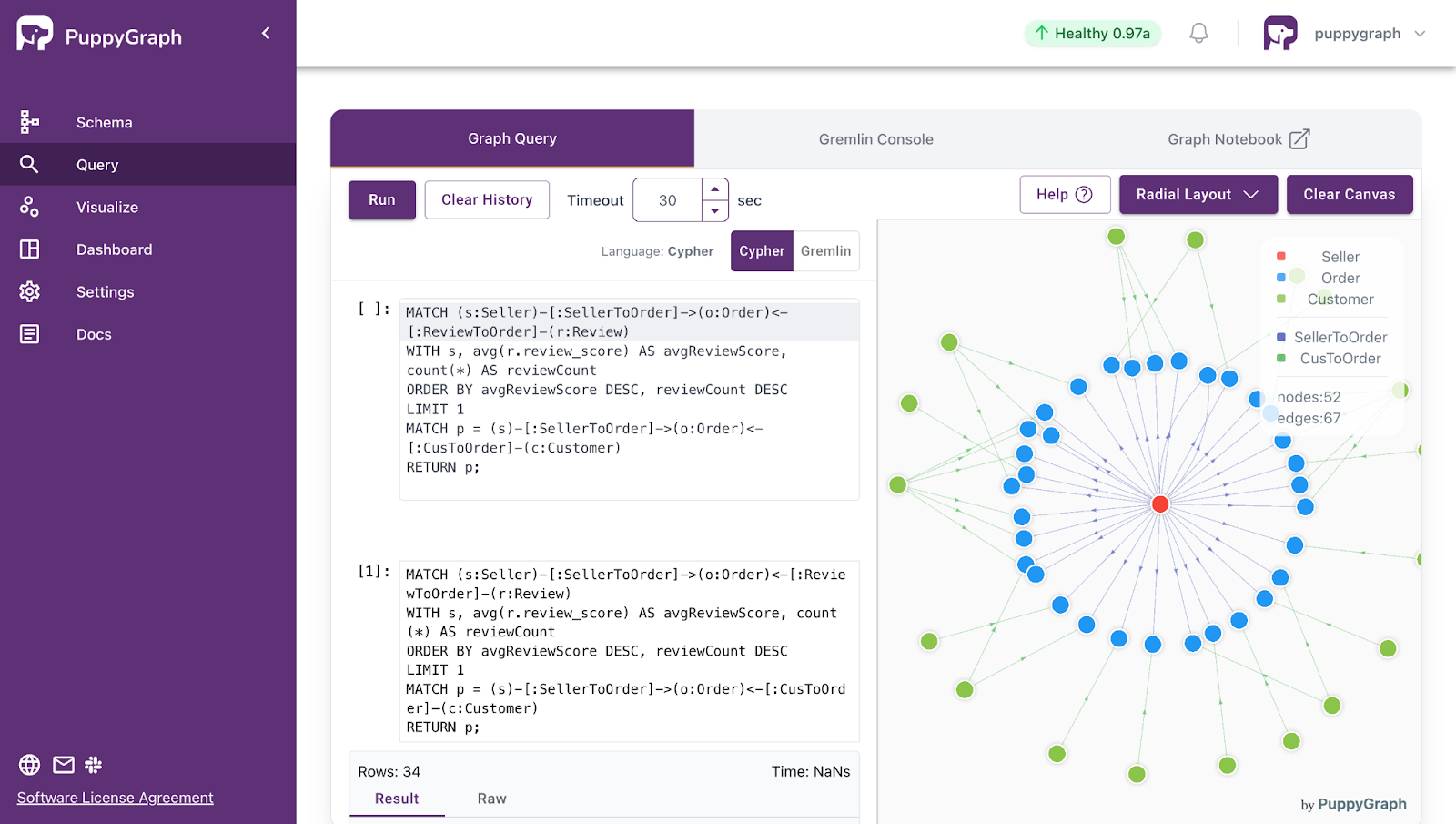

PuppyGraph runs as a real-time, zero-ETL graph query engine on top of existing relational databases and data lakes. You define graph views over tables in systems like PostgreSQL, MySQL, DuckDB, and lakehouse formats such as Iceberg, Hudi, and Delta Lake, then query relationships directly, all without copying data or maintaining pipelines.

Here are the key PuppyGraph capabilities:

- Zero ETL: PuppyGraph runs as a query layer on top of your existing data, without any pipelines to build or maintain. You can start querying relationships in minutes.

- No Data Duplication: Data stays where it is. You don’t need to copy large datasets into a separate graph store, helping preserve consistency and reuse existing access controls.

- Real-Time Analysis: Queries run on live data, so results reflect the current state of the system. Users report multi-hop queries, six hops across billions of edges, returning in under a few seconds.

- Scalable Performance: The distributed execution engine scales with your cluster. It supports deep traversals and large datasets through parallel execution and vectorized processing.

- SQL and Graph Together: Teams can keep using SQL engines for tabular analysis and PuppyGraph for relationship-heavy queries, all on the same source tables. You don’t need to force every workload into a graph database or retrain teams on a new system.

- Lower Total Cost of Ownership: Traditional graph stacks add cost through ETL pipelines, duplicated storage, separate governance, and memory-heavy infrastructure. PuppyGraph avoids these by querying data in place, with no second system to operate or secure.

- Flexible, Iterative Modeling: Metadata-driven schemas allow multiple graph views over the same data. Models can evolve quickly without rebuilding pipelines, supporting fast iteration.

- Standard Querying and Visualization: PuppyGraph supports common graph query languages like openCypher and Gremlin, in addition to built-in visualization tools for interactive exploration.

- Proven at Enterprise Scale: Many large cybersecurity companies and engineering-focused organizations, like AMD and Coinbase, use PuppyGraph. These teams trust PuppyGraph for security analysis, asset intelligence, and deep relationship queries without the overhead of ETL-heavy graph architectures.

As data systems grow more complex, the definitive insight often comes from how entities connect. PuppyGraph allows you to easily explore those relationships, whether you’re modeling organizational networks, social interactions, fraud and cybersecurity graphs, or building GraphRAG pipelines that track where knowledge comes from.

To get started, download the free Docker image, connect PuppyGraph to your existing data stores, define your graph schema, and start querying. PuppyGraph runs in Docker, an AWS AMI, through the GCP Marketplace, or inside your own VPC or data center if you need full control over data and networking.

Conclusion

Relationship questions are spreading across teams: security, data, product, and AI workflows now depend on connected data staying both correct and fast. In time, there will be more pipelines, more duplication, and more disagreement about “what’s true.”

Your data likely already lives in warehouses and lakehouses, and duplicating it into a separate graph system can become the biggest cost on the roadmap. PuppyGraph brings graph querying to the data you already trust, without ETL, without copies, and without a second platform to maintain.

To try it out for yourself, download PuppyGraph's forever free Developer edition or book a free demo.

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single noded

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in developer edition & enterprise features

- Designed for production

- Available via AWS AMI & Docker install